A Preflight Post: Expanding on Google Gemini 2.5 Pro

One of the core values of Continue has always been choice: the freedom to select the right model for the job. As I type this, I'm waiting for a load of laundry to dry so I can pack for Google Cloud Next 2025, so it seemed a fitting time to talk about Google's Gemini 2.5 Pro and how it transformed the development workflow for one of my projects.

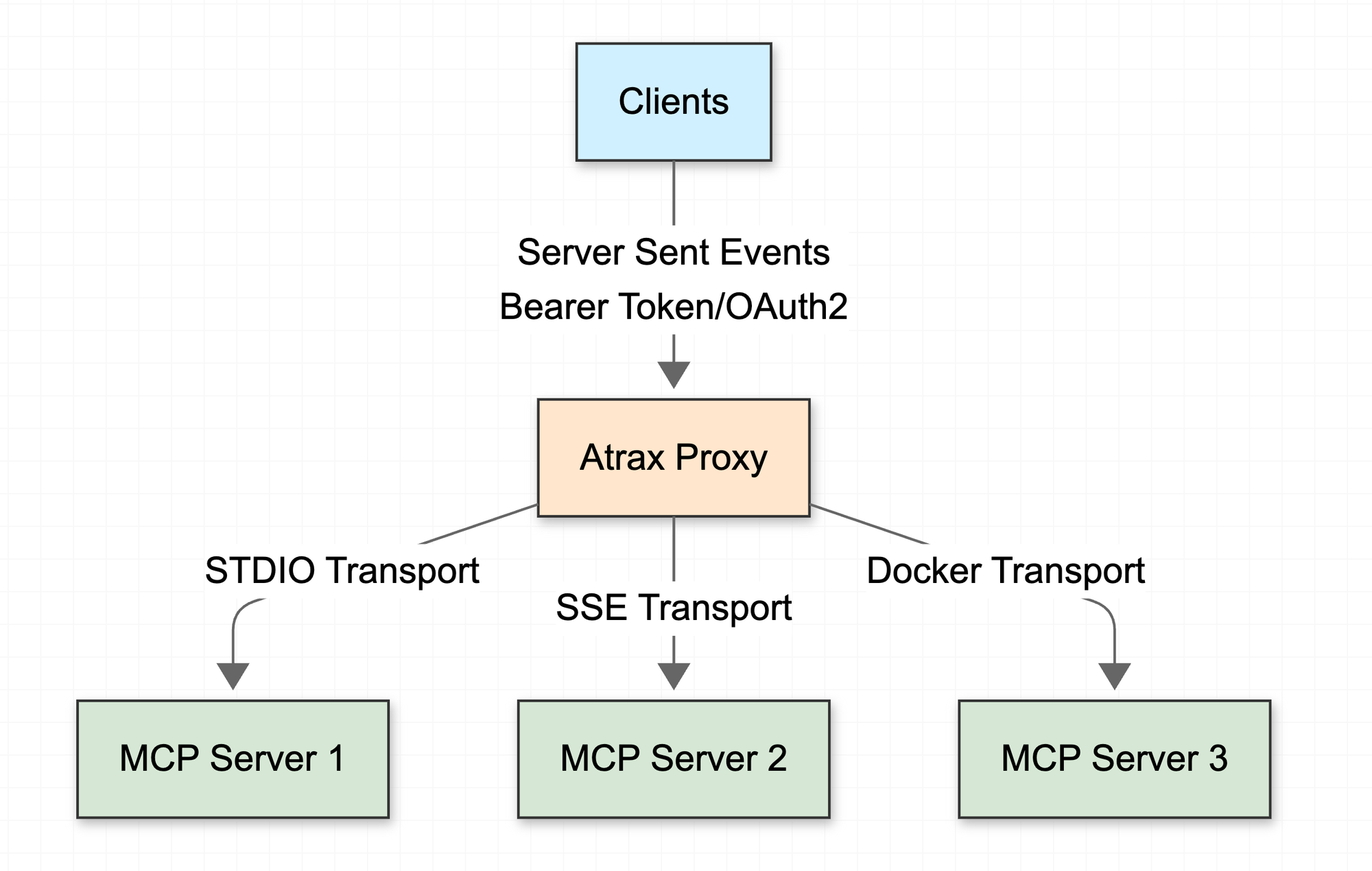

Since I started working with Continue, I've gotten a lot more coding done. Recently, I've been working on Atrax, a Model Context Protocol (MCP) proxy. It frustrated me that many of the example MCP servers offered little in the way of security out of the box, and most were designed to be run locally by developers with individual credentials, personal access tokens (PATs), and the like, scattered across machines everywhere. So what if we could put something secure in front of unsecured MCP servers and manage credentials in only one place, protected by OAuth 2.0? Could it work?
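To make the idea concrete, here is a minimal TypeScript sketch of the credential-centralizing pattern. It is not Atrax's actual code. It assumes the proxy has already received a request and shows only the decision it makes: check the caller's OAuth2 bearer token, then attach the backend server's own credentials from a single store. `CredentialProxy`, `TokenValidator`, and the backend name `github` are hypothetical, and token validation is stubbed out.

```typescript
// Hypothetical sketch (not Atrax's implementation): clients authenticate to the
// proxy with one OAuth2 bearer token, while each backend MCP server's own
// credentials live in a single server-side store.

// Header name -> value to attach when calling a given backend server.
type BackendCredentials = Record<string, string>;

// Stub for OAuth2 token validation. A real proxy would verify a JWT's signature
// and claims or call the authorization server's introspection endpoint.
type TokenValidator = (token: string) => boolean;

interface ForwardedRequest {
  backend: string;
  headers: Record<string, string>;
  body: string;
}

class CredentialProxy {
  private readonly credentials: Map<string, BackendCredentials>;
  private readonly validateToken: TokenValidator;

  constructor(credentials: Map<string, BackendCredentials>, validateToken: TokenValidator) {
    this.credentials = credentials;
    this.validateToken = validateToken;
  }

  // Builds the request to send upstream. Header names are assumed lowercase,
  // as Node.js normalizes incoming HTTP header names.
  forward(backend: string, incomingHeaders: Record<string, string>, body: string): ForwardedRequest {
    const auth = incomingHeaders["authorization"] ?? "";
    const token = /^Bearer\s+(\S+)$/i.exec(auth)?.[1];
    if (!token || !this.validateToken(token)) {
      throw new Error("401 Unauthorized: missing or invalid bearer token");
    }

    const creds = this.credentials.get(backend);
    if (!creds) {
      throw new Error(`404 Not Found: unknown backend "${backend}"`);
    }

    // The caller's token is deliberately not forwarded; only the backend's own
    // credentials are attached, so clients never handle them directly.
    return { backend, headers: { ...creds }, body };
  }
}
```

With this shape, rotating a backend's PAT means updating one entry in the store rather than touching every developer's machine.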

The Context Challenge

When building Atrax, my main challenge was that I needed a lot of context. For those new to working with large language models, "context length" refers to how much information a model can process at once: essentially its working memory.

Each token (roughly ¾ of a word in English) counts against this context limit, both for your input and the model's output. When you're working with complex codebases or technical documentation, these limits become a real pain.
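As a back-of-the-envelope illustration, here is a TypeScript sketch that turns the ¾-word rule of thumb into a budget check. It is a heuristic, not a real tokenizer: actual counts depend on each model's tokenizer and differ between prose and code. The `estimateTokens` and `checkContextBudget` names, the output reservation, and the window sizes used below are illustrative assumptions.

```typescript
// Rule of thumb: one English token is roughly 0.75 words, so tokens ≈ words / 0.75.
// Real tokenizers vary by model; treat this as a ballpark only.
const WORDS_PER_TOKEN = 0.75;

function estimateTokens(text: string): number {
  const words = text.trim().split(/\s+/).filter((w) => w.length > 0);
  return Math.ceil(words.length / WORDS_PER_TOKEN);
}

interface BudgetCheck {
  inputTokens: number;
  reservedForOutput: number;
  total: number;
  fits: boolean;
}

// Both the prompt (input) and the reply (output) count against the window,
// so room for the model's answer is reserved before deciding whether docs fit.
function checkContextBudget(
  inputs: string[],
  contextWindow: number,
  reservedForOutput: number,
): BudgetCheck {
  const inputTokens = inputs.reduce((sum, text) => sum + estimateTokens(text), 0);
  const total = inputTokens + reservedForOutput;
  return { inputTokens, reservedForOutput, total, fits: total <= contextWindow };
}
```

For example, roughly 150,000 words of documentation estimates to 200,000 input tokens; with 8,000 tokens reserved for the reply, that overflows a 200,000-token window but fits comfortably in a 1,000,000-token one.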

While developing Atrax, I was constantly referencing the MCP Inspector and the MCP TypeScript SDK. I struggled to keep the model on track: I wanted it to use the SDK, but it kept trying to reinvent serialization logic the SDK already provides.

The more context I fed in, the more the model would wander, vary, or flip-flop in its approach. This was particularly annoying because it would suggest one implementation that didn't work, "fix" it with a completely different approach that also didn't work, then circle back to the first approach again... rinse and repeat. I felt like I was stuck in an endless loop of almost-but-not-quite-working solutions.

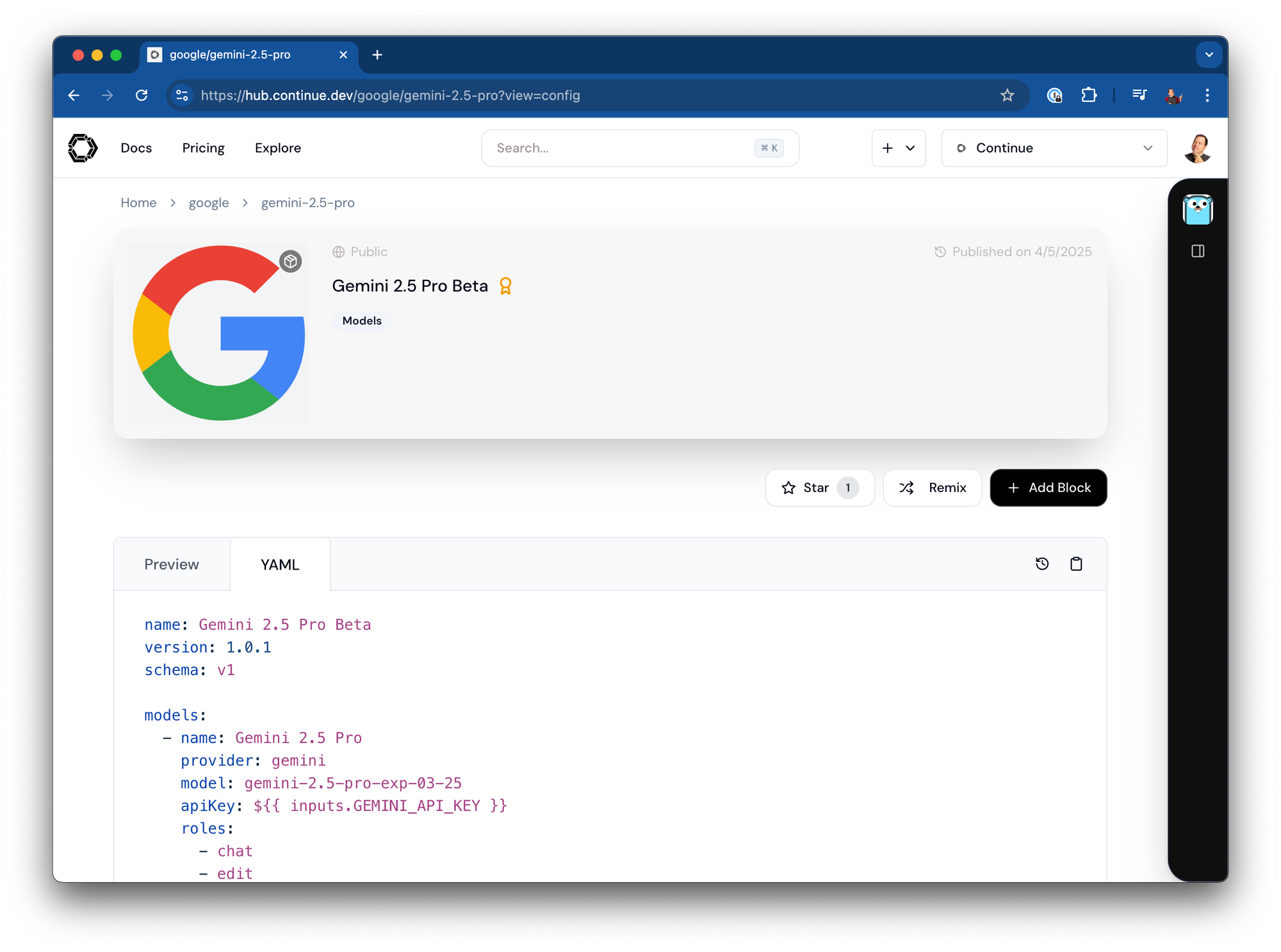

When I saw that Continue Hub had just added Gemini 2.5 Pro, I decided to give it a try. The difference was immediate. With 5x the context length (about 1 million tokens, compared to the 200k I had been working with), I could include comprehensive documentation and examples without the model losing focus.

Beyond raw context length, Gemini 2.5 Pro seemed to follow instructions more precisely. No more attempts to roll custom serialization logic when the SDK already provided it. No more hardcoding code paths just to make tests pass.

Using Gemini 2.5 Pro with Continue

Here's how to get started:

- Visit Continue Hub and find the Gemini 2.5 Pro block

- Add it to your Continue assistant

- Set up an API key following Google's documentation

- Select it in your IDE of choice

That's it! Once configured, you can use all of Gemini's capabilities directly in your development workflow.

The Bigger Picture

The improvements in context handling and instruction following aren't minor upgrades; they're changing how we code with AI. Being able to include more documentation, more code samples, and more detailed instructions lets the model ground its suggestions in the actual APIs we're using instead of guessing.

Every model has its strengths, and having options like Gemini 2.5 Pro in your toolkit lets you pick the right tool for each task. That's what Continue is all about: empowering developers with choice.

At the end of the day, the goal remains the same: enabling developers to be amplified, not automated. By having access to models that better understand our intentions and can work with more context, we can focus on higher-level problems while letting AI handle more of the implementation details.

I'm excited to see how these capabilities continue to evolve and how they'll further transform our development workflows.

Join other developers on our Discord to discuss whether Gemini 2.5 Pro really works better for your projects or if it just feels like it. I'd love to hear your experiences!