Context for the Agent, Ownership for the Human: Why unowned AI contributions are breaking open source

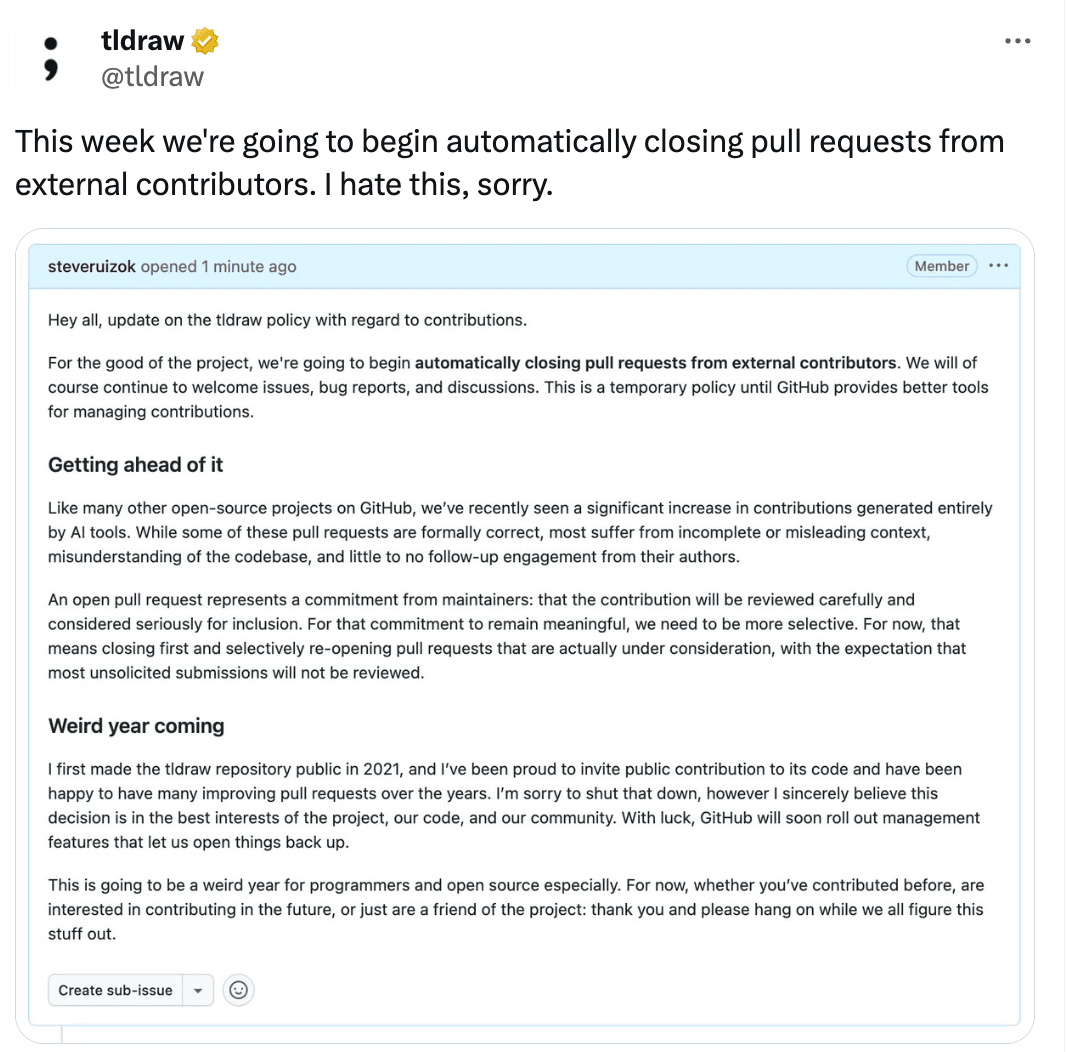

In the last year, we've seen a huge increase in AI-generated pull requests on open source projects. Some of these PRs are formally correct. Some even pass tests. Even so, the volume has become hard to absorb, and in some cases maintainers are temporarily closing all external PRs to protect the project and themselves.

Open source isn’t turning against AI. Maintainers are pushing back against unowned execution.

It’s a rational response to a system under strain.

This isn’t a story about bad contributors or malicious automation. Many contributors are well-meaning. The problem is that AI has changed the shape of work faster than our contribution norms, workflows, and social contracts have evolved to support it. What used to be a manageable trickle of misunderstanding has become a flood.

The Problem with AI PRs in Open Source

We’ve been here before. Hacktoberfest spam forced maintainers to draw hard lines. Drive-by whitespace fixes and “Hello World” PRs made it obvious when a contribution had no intent behind it. Those were easy to close.

What’s different now is that AI-generated PRs don’t look obviously bad. They look fine. Tests pass. The code works.

Maintainers keep reporting the same thing: nobody is home. No follow-up. No understanding. No ownership. The real issue is how contributors are using AI. An AI-generated pull request with no accountable owner is indistinguishable from spam, even if the diff looks clean.

Every pull request implicitly asks a maintainer to evaluate:

- Does this fit the project’s architecture?

- Does it align with long-term goals?

- Does it reintroduce tradeoffs we’ve already rejected?

- Will this increase maintenance burden six months from now?

Historically, that context gap narrowed over time. Contributors learned project norms through prior PR feedback, discussion, and repeated interaction with maintainers. The cost of misunderstanding was amortized across that relationship.

AI has made it cheap to produce code. It has not made it cheap to understand a project because understanding requires time and skill.

When maintainers push back on AI-generated PRs, what they’re really asking for is fewer surprises, earlier alignment, less repeated explanation, clear ownership and follow-through, and evidence the contributor (and their AI) reviewed the work before asking for maintainer time.

In other words, they want intent to be validated before implementation, and context to travel with the work. This is a systems demand.

Context as Configuration

We’ve seen this pattern before. When tooling lowers the cost of producing work without lowering the cost of reviewing it, the bottleneck moves and burnout follows.

If we look at how open source has adapted to past scaling pressures, a pattern emerges:

- Social norms → written guidelines

- Written guidelines → enforced checks

- Enforced checks → standardized formats

It’s reasonable to predict that AI-assisted contribution will follow the same path. In the near future, we’re likely to see:

- Project rules expressed in more structured, machine-readable forms

- Contribution standards that explicitly address AI use

- Pre-implementation validation becoming more common than post-hoc review

- Shared expectations about AI-assisted ownership and follow-up

Maintainers need to find leverage over cognitive load. In practice, this doesn’t require new standards or platforms.

We are moving toward a world where repository context is machine-readable by default. Whether it's a rules file for an AI agent or a specialized linter, the goal is the same: shift the rejection left. Catch the misalignment before it ever becomes a notification on the maintainer's phone.
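To make "shift the rejection left" concrete, here is a minimal sketch of a pre-review check that runs before a maintainer is notified. Everything in it is an assumption for illustration: the `RULES` keys, the `PullRequest` shape, and the `pre_review` function are hypothetical and do not correspond to any particular platform's API.

```python
from dataclasses import dataclass
from fnmatch import fnmatch
from typing import List, Optional

# Hypothetical project rules. In a real repository these might be loaded
# from a rules file; the keys below are assumptions, not a standard.
RULES = {
    "protected_paths": ["core/*", "migrations/*"],
    "require_linked_issue": True,
    "max_changed_files": 20,
}


@dataclass
class PullRequest:
    """Illustrative stand-in for the PR metadata a check would receive."""
    changed_files: List[str]
    linked_issue: Optional[str] = None


def pre_review(pr: PullRequest, rules: dict) -> List[str]:
    """Return problems to show the contributor before any maintainer sees the PR."""
    problems = []
    # Intent should be validated before implementation: require prior discussion.
    if rules.get("require_linked_issue") and not pr.linked_issue:
        problems.append("No linked issue: align with maintainers before implementing.")
    # Oversized changes are expensive to review.
    if len(pr.changed_files) > rules.get("max_changed_files", float("inf")):
        problems.append("Too many files changed: split the PR.")
    # Flag edits to areas the project treats as sensitive.
    for path in pr.changed_files:
        for pattern in rules.get("protected_paths", []):
            if fnmatch(path, pattern):
                problems.append(f"{path} matches protected pattern {pattern!r}.")
    return problems


if __name__ == "__main__":
    pr = PullRequest(changed_files=["core/engine.py", "docs/readme.md"])
    for problem in pre_review(pr, RULES):
        print(problem)
```

The point of the sketch is where the feedback lands: the contributor, or their agent, sees the list of problems and fixes them before the PR ever asks for maintainer attention.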

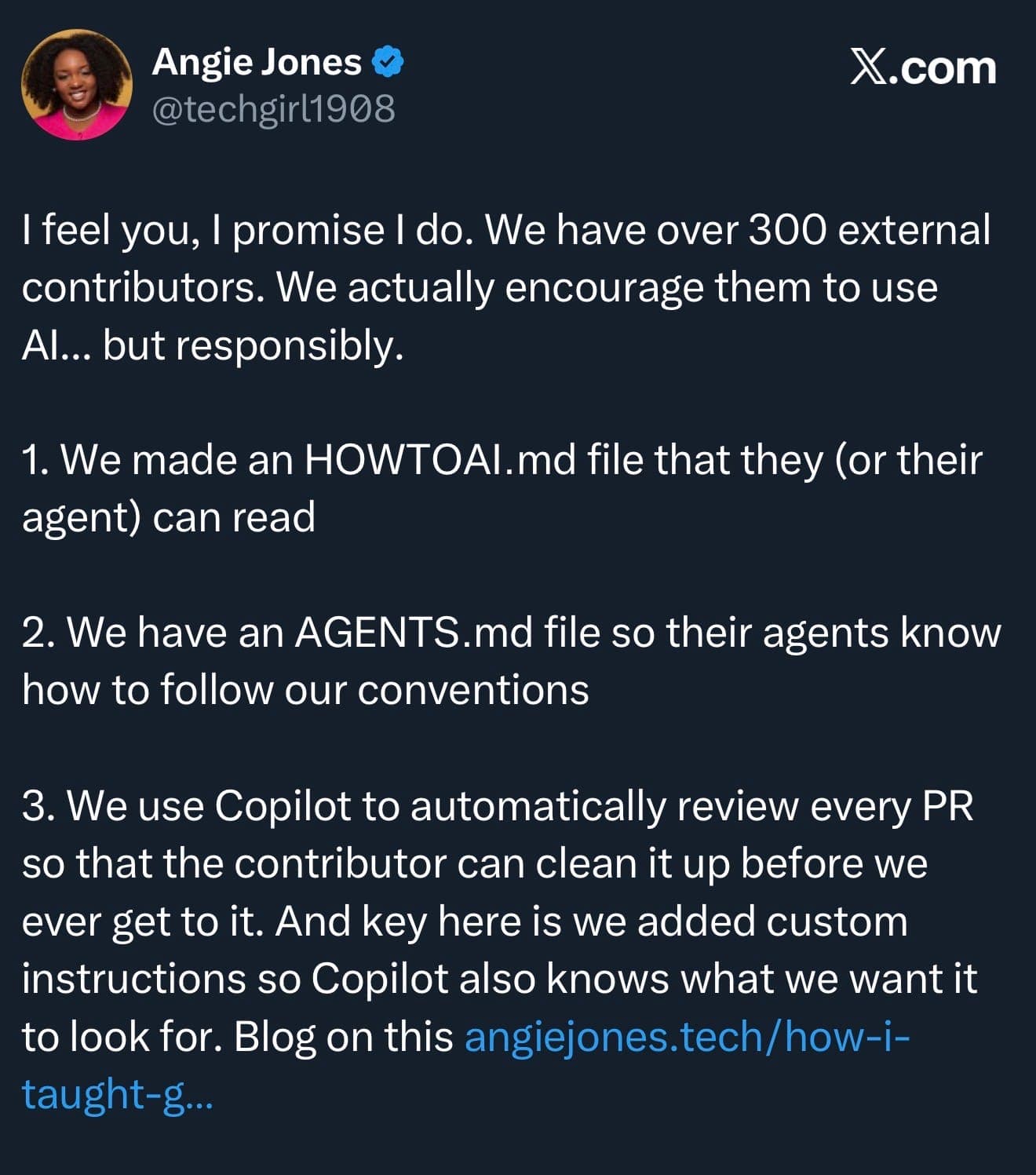

Angie Jones shares how Block is encouraging AI use by pairing it with explicit, machine-readable guidance and AI-based pre-review so contributors clean up their work before maintainers ever see it. The difference isn’t the presence of AI. It’s ownership, context, and follow-through.

Context Stewardship as Infrastructure

AI systems are not capable of “absorbing culture” unless that culture is expressed in a way they can consume. Documentation explains how things work. Context stewardship explains why things are the way they are.

When this information is only available through repeated explanation, it becomes a bottleneck. An .md file in the repo describing architectural constraints isn't just documentation; it's infrastructure for the AI agent.
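As a rough illustration of treating that file as infrastructure, the sketch below parses a context file into constraint and rationale pairs and flags any constraint that is missing its "why". The file format (a `## ` heading per constraint followed by a `Why:` line), the sample contents, and the function names are all assumptions made up for this example.

```python
import re
from typing import Dict, List, Optional

# Hypothetical contents of a context file; the format and the constraints
# themselves are invented for illustration.
CONTEXT_MD = """\
## No new runtime dependencies
Why: the package must install offline in restricted environments.

## Public API is synchronous
Why: an async rewrite was evaluated and rejected for maintenance cost.

## Keep config in one file
"""


def parse_constraints(text: str) -> List[Dict[str, Optional[str]]]:
    """Split the file on '## ' headings and pair each rule with its rationale."""
    constraints = []
    # The first split element is whatever precedes the first heading; skip it.
    for block in re.split(r"^## ", text, flags=re.MULTILINE)[1:]:
        lines = block.strip().splitlines()
        rule = lines[0].strip()
        why = next(
            (line[len("Why:"):].strip() for line in lines[1:] if line.startswith("Why:")),
            None,
        )
        constraints.append({"rule": rule, "why": why})
    return constraints


def missing_rationale(constraints: List[Dict[str, Optional[str]]]) -> List[str]:
    """List rules that state what is required but not why."""
    return [c["rule"] for c in constraints if not c["why"]]


if __name__ == "__main__":
    parsed = parse_constraints(CONTEXT_MD)
    print(missing_rationale(parsed))
```

A check like `missing_rationale` keeps the stewardship honest: a rule without a recorded reason is exactly the kind of context that ends up being re-explained in review.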

Restoring the Social Contract

For a long time, open source had an unspoken form of social pressure. When you opened a pull request, you were visible:

- Your name was attached to the change

- Your understanding (or misunderstanding) was on display

- You expected to answer questions

- You expected to learn

Submitting a PR wasn’t just a technical act. It was a social one.

You didn’t want to look careless or waste maintainer time. You wanted to prove you were paying attention. That pressure wasn’t always kind, but it did create accountability.

When an AI produces the change and the contributor never engages with it, that pressure fades. The result is PRs where follow-up is slow, feedback is shallow, and ownership quietly disappears because the social contract has weakened.

Open source has always operated on an implicit contract:

If you submit the work, you own the consequences.

That contract still matters, and it needs to be restated explicitly in an AI-assisted world.

Using AI does not reduce responsibility. If anything, it increases it. If you didn’t write every line by hand, you still need to:

- Understand what the code does

- Explain why it belongs in the project

- Respond to review feedback

- Revise or withdraw the change if it’s wrong

AI can help you think. It cannot think for the project. This is also where social pressure re-enters the picture, not as gatekeeping, but as respect.

Clear norms and better tooling will solve most of this. But when contributors repeatedly ignore context, skip follow-up, or treat PRs as disposable, enforcement becomes necessary. Hacktoberfest disqualifies spam for a reason. Projects ban repeat offenders for a reason.

Consequences aren't for cruelty's sake. They protect the people who keep the project alive. An enforced code of conduct that includes contribution quality and accountability is not optional anymore. It’s overdue.

AI-Assisted PRs Still Need Human Owners

From a contributor's perspective, the takeaway needs to be clear:

Every PR must have a clearly accountable human owner regardless of how the code was generated.

That owner is responsible for context, follow-up, and revision.
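One way a project could make that expectation checkable is a small gate on the PR description. This is only a sketch: the template fields in `REQUIRED_FIELDS` and the `ownership_gaps` function are hypothetical, and a filled-in field is evidence of ownership, not proof of it.

```python
import re
from typing import List

# Hypothetical PR template fields; the names are assumptions for illustration.
REQUIRED_FIELDS = ("Owner", "What this changes", "Why it belongs here")


def ownership_gaps(description: str) -> List[str]:
    """Return the template fields that are missing or left blank."""
    gaps = []
    for field in REQUIRED_FIELDS:
        # Match "Field: value" on a single line; a blank value counts as missing.
        match = re.search(
            rf"^{re.escape(field)}:[ \t]*(.*)$", description, flags=re.MULTILINE
        )
        if not match or not match.group(1).strip():
            gaps.append(field)
    return gaps


if __name__ == "__main__":
    description = (
        "Owner: @alice\n"
        "What this changes: fixes retry backoff\n"
        "Why it belongs here:\n"
    )
    print(ownership_gaps(description))
```

A gate like this cannot verify understanding, but it does force the question of who will answer for the change before a maintainer spends time on it.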

For projects, the bridge into an AI landscape is turning culture, constraints, and context into infrastructure that any contributor, human or agent, can understand instantly.

AI lowered the cost of producing code. Open source now has to rebalance by raising the clarity of responsibility. Contribution, even with AI, is still a relationship. The tools have changed, but the requirement for trust hasn’t. We just need to build the infrastructure to support it.