Fight Code Slop with Continuous AI

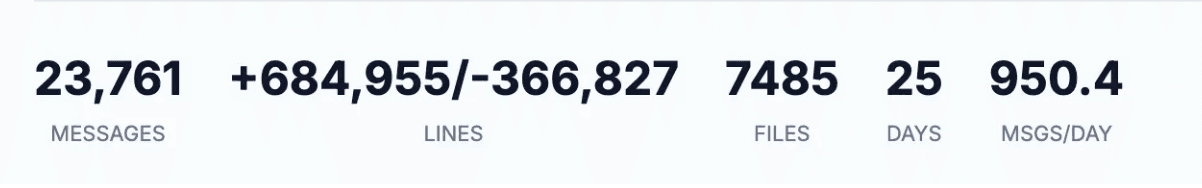

In the past 25 days I've generated close to 700,000 lines of code using Claude Code in parallel across git worktrees. I don't know how long it would take to actually read all that code, but I can assure you it's more time than I have.

I can also assure you that of those 700,000 lines, many (most?) of them were slop.

Duplicated logic, messy abstractions, useless comments, security vulnerabilities, inefficient database queries—the standard slop that even the smartest, most expensive agents inevitably produce unless you keep them on an extremely tight leash.

At Continue, our team fights slop together. We've encoded our shared standards into an agent that runs on every PR we open. We call it our Anti-Slop agent.

How the Anti-Slop Cloud Agent Works

The idea is simple: encode your team's standards into an automated cloud agent that runs before you ever see the PR. Turn your best practices into an AI reviewer.

Our Anti-Slop agent runs in CI on every PR. It looks at the changed files, identifies slop patterns, and cleans them up. If the code is already clean, it does nothing.

Here's the core of the prompt:

Follow the Anti AI-slop rule: clean up any AI-generated code patterns that harm readability and maintainability. Look at the files changed in this PR. If there are any with slop patterns, make targeted changes to clean them up. Stick to a single file maximum. If no changes are necessary, do nothing.

Then we specify what “slop” means for us, in our own codebase: things like N+1 queries with TypeORM, or duplicated methods when the agent forgets to check our shared packages.
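To make the N+1 pattern concrete, here is a minimal sketch. It deliberately does not use TypeORM: `FakeDb` and its methods are hypothetical stand-ins that only count simulated queries, so the example runs without a database.

```typescript
// Hypothetical in-memory stand-in for a database; it only counts "queries".
interface User { id: number; name: string }
interface Post { id: number; userId: number; title: string }

class FakeDb {
  queryCount = 0;
  private users: User[];
  private posts: Post[];

  constructor(users: User[], posts: Post[]) {
    this.users = users;
    this.posts = posts;
  }

  findUsers(): User[] {
    this.queryCount++;
    return [...this.users];
  }

  // One query per user: the building block of the N+1 pattern.
  findPostsByUser(userId: number): Post[] {
    this.queryCount++;
    return this.posts.filter((p) => p.userId === userId);
  }

  // One batched query for many users (conceptually WHERE userId IN (...)).
  findPostsByUsers(userIds: number[]): Post[] {
    this.queryCount++;
    const wanted = new Set(userIds);
    return this.posts.filter((p) => wanted.has(p.userId));
  }
}

// Slop: 1 query for the users, then N more queries, one per user.
function postCountsNPlusOne(db: FakeDb): Map<number, number> {
  const counts = new Map<number, number>();
  for (const user of db.findUsers()) {
    counts.set(user.id, db.findPostsByUser(user.id).length);
  }
  return counts;
}

// Cleaned up: 2 queries total, with the grouping done in memory.
function postCountsBatched(db: FakeDb): Map<number, number> {
  const users = db.findUsers();
  const counts = new Map<number, number>();
  for (const u of users) counts.set(u.id, 0);
  for (const post of db.findPostsByUsers(users.map((u) => u.id))) {
    counts.set(post.userId, (counts.get(post.userId) ?? 0) + 1);
  }
  return counts;
}
```

Both functions return the same counts, but the first issues one query per user. In a TypeORM codebase the cleaned-up version would typically mean loading the relation in the same query (for example through the `relations` find option or a query-builder join) instead of calling a repository inside a loop.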

Why This Works

Off-the-shelf agents are great at catching bugs through static analysis. But as we delegate more programming work to agents, code review needs to go beyond bug-catching. We need to encode our team's knowledge and best practices into agents, the same way a senior engineer passes hard-won knowledge of a codebase to a new junior engineer through the review process.

The problem is you can't shove all of that into the context window of a coding agent. A fresh agent with a new context window is both more reliable and cheaper than asking your coding agent to remember to clean up after itself, and it's easier for humans to review too. Looking at code through a narrow lens, one concern at a time, is more manageable than trying to hold everything in your head at once. The same is true for agents.

So you need a separate review step, ideally broken out into discrete focus areas that your team owns and iterates on. Running it in CI means every PR gets checked regardless of whether anyone remembered to run the check locally, the same guarantee you expect from any other CI check.
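One way to picture discrete focus areas is as a small list of checks, each with its own narrowly scoped prompt. The sketch below is illustrative only: the `AgentCheck` shape, `buildPrompt`, and the wording of the example rules are assumptions, not Continue's actual configuration format.

```typescript
// Hypothetical shape for a team-owned check; not a real Continue config schema.
interface AgentCheck {
  name: string;
  instruction: string; // the general task for this focus area
  teamRules: string[]; // codebase-specific definitions the team iterates on
}

const antiSlop: AgentCheck = {
  name: "anti-slop",
  instruction:
    "Look at the files changed in this PR. If there are any with slop patterns, " +
    "make targeted changes to clean them up. Stick to a single file maximum. " +
    "If no changes are necessary, do nothing.",
  teamRules: [
    "Flag N+1 queries with TypeORM.",
    "Flag methods that duplicate helpers already in our shared packages.",
  ],
};

// Each check gets its own prompt, so each agent run starts from a fresh, narrow context.
function buildPrompt(check: AgentCheck): string {
  const rules = check.teamRules.map((rule, i) => `${i + 1}. ${rule}`).join("\n");
  return `${check.instruction}\n\nTeam rules for "${check.name}":\n${rules}`;
}

console.log(buildPrompt(antiSlop));
```

Keeping the team rules as plain data next to the instruction makes them easy to review and change in a PR, like any other shared standard.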

These agent checks don't replace human review. They take care of the tedious, nitpicky feedback that code reviews often get bogged down in, so you can focus on the things that actually matter, like system architecture.

This Is Continuous AI

This is what we mean by Continuous AI: agents that run automatically in response to signals from your development workflow and external environment. A PR opens, an agent reviews it. An alert fires, an agent triages it. Your tools emit signals; agents take action. You can read more about the levels of Continuous AI in our guide and in this blog post.

The Anti-Slop agent is one of the simplest examples, but the pattern scales. Security checks, performance audits, documentation updates, dependency reviews. Each one a discrete agent your team owns and iterates on.
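The signal-to-agent mapping described above can be pictured as a routing table. This is a minimal sketch under assumed names: the `Signal` types, the agent names, and `agentsFor` are hypothetical and do not describe a real Continue API.

```typescript
// Hypothetical signal kinds emitted by development tools.
type Signal =
  | { kind: "pr_opened"; repo: string; prNumber: number }
  | { kind: "alert_fired"; service: string; severity: "low" | "high" };

// Hypothetical routing table: each signal kind maps to the discrete agents that handle it.
const routes: Record<Signal["kind"], string[]> = {
  pr_opened: ["anti-slop", "security-check", "docs-update"],
  alert_fired: ["alert-triage"],
};

// Describe which agents would start for a signal; a real system would launch them instead.
function agentsFor(signal: Signal): string[] {
  const target =
    signal.kind === "pr_opened"
      ? `${signal.repo}#${signal.prNumber}`
      : `${signal.service} (${signal.severity})`;
  return routes[signal.kind].map((agent) => `${agent} -> ${target}`);
}

// Illustrative values only; the repo, PR number, and service are made up.
console.log(agentsFor({ kind: "pr_opened", repo: "acme/api", prNumber: 42 }));
console.log(agentsFor({ kind: "alert_fired", service: "billing", severity: "high" }));
```

Adding a new check then amounts to adding one more agent name to the relevant route, which keeps each agent small and independently owned.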

The goal isn't to replace developers. It's to encode the work that's already happening into systems that run continuously, so humans can focus on the work that actually requires human judgment.

Try It Yourself

You can try the Anti-Slop agent yourself by clicking the link below and connecting your GitHub account. It'll be added as a CI check on your repos and run on every PR to help you fight back against slop.