It’s time to collect data on how you build software

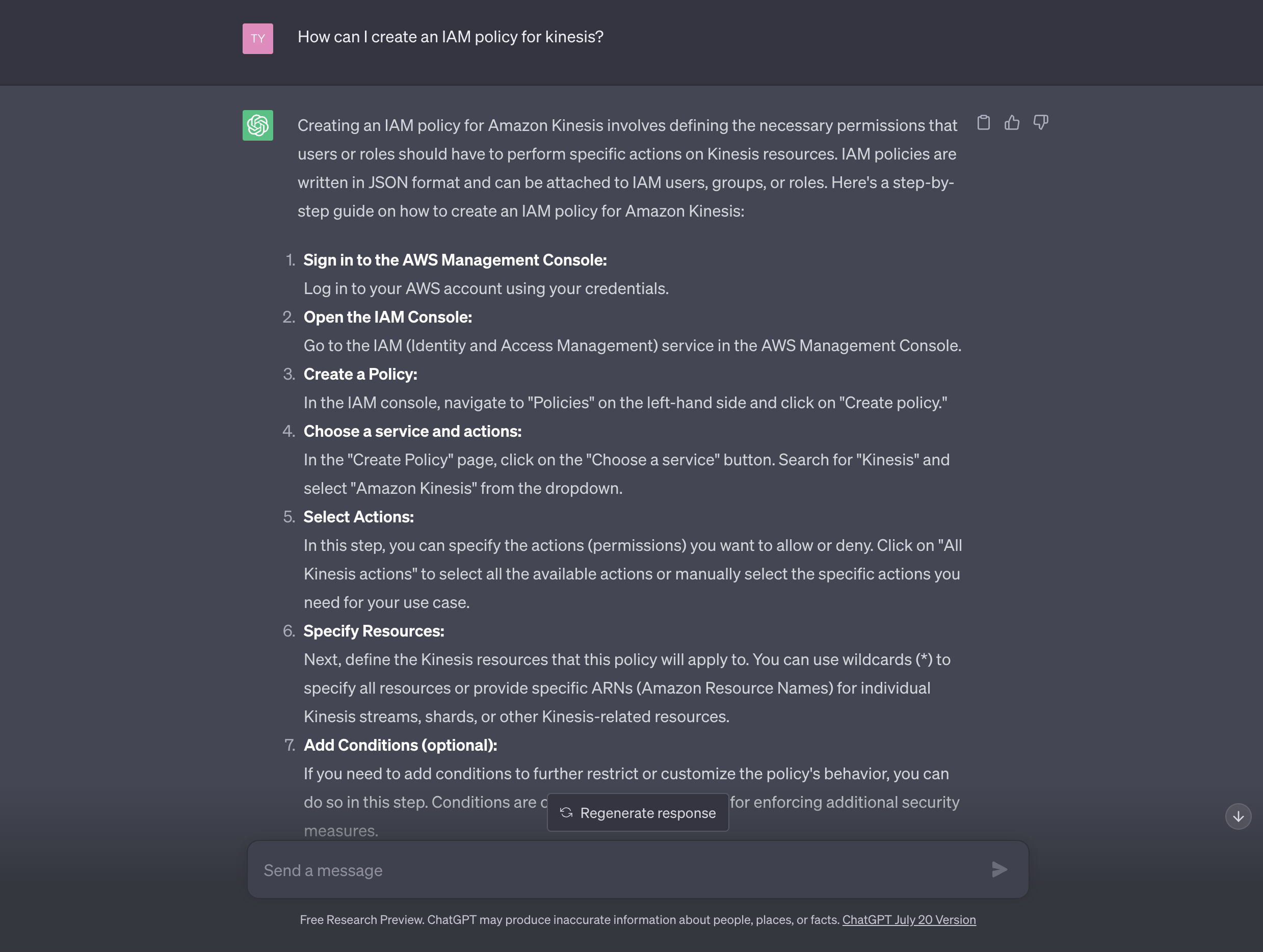

The next generation of developers uses LLMs instead of Google + Stack Overflow

The next generation of developers is replacing Google + Stack Overflow with Large Language Models (LLMs), just as the generation before replaced reference manuals with Google + Stack Overflow. The organizations that retain and attract developers through this transition will:

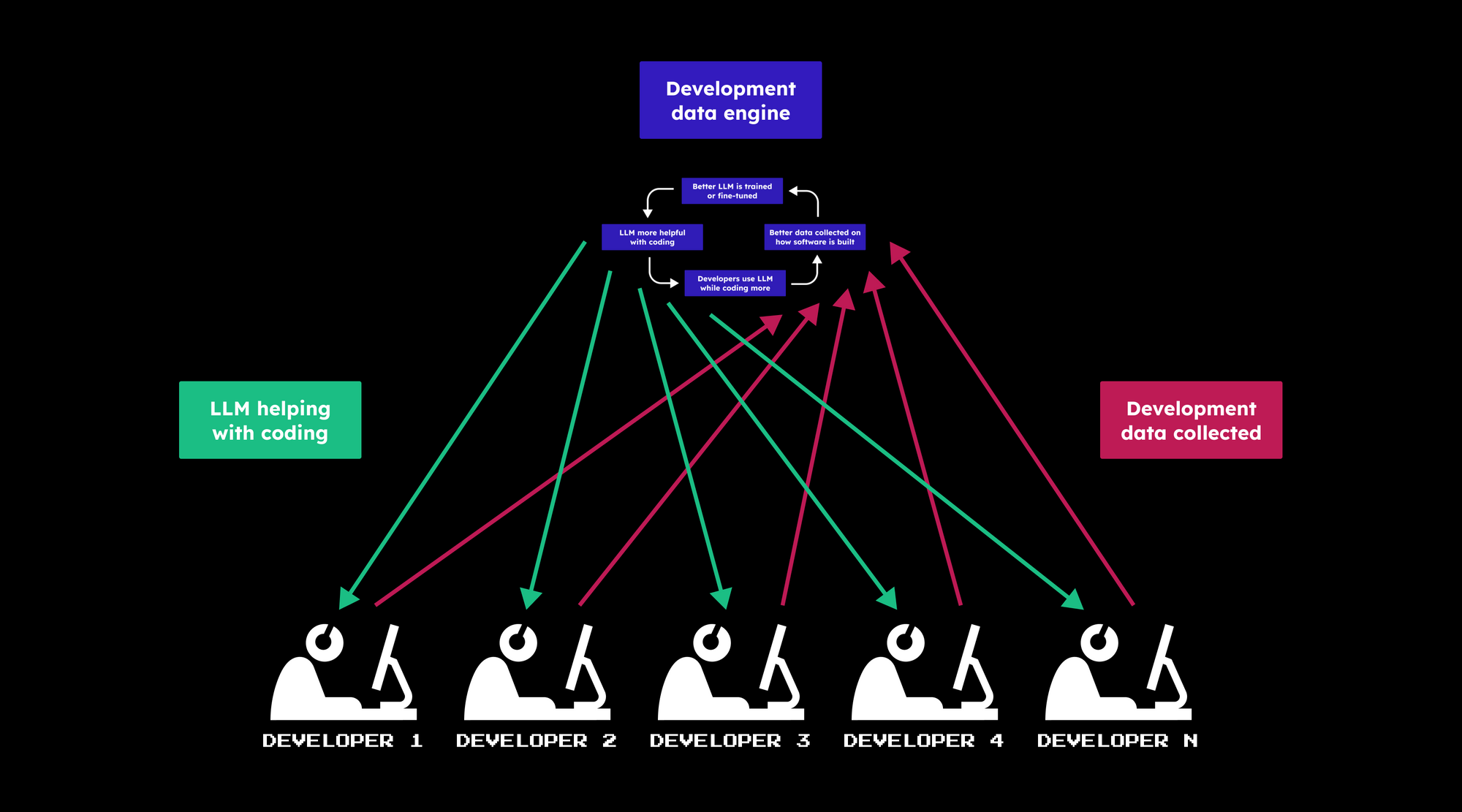

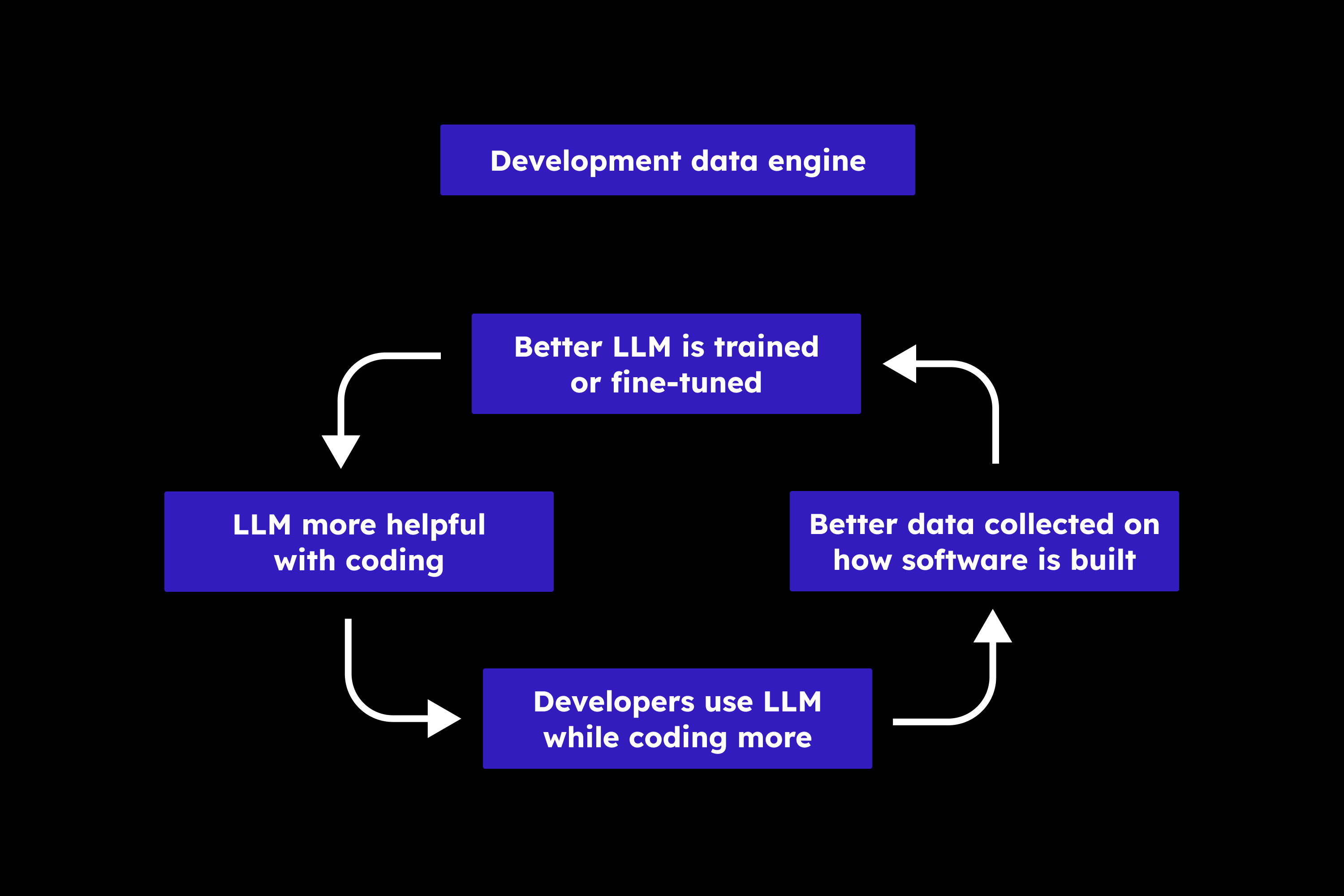

- First, understand how their developers use LLMs and demonstrate the ROI of that use by collecting development data: data on how their organization builds software.

- Then, use this foundation to establish a development data engine: a continuous feedback loop that keeps the LLMs supplied with fresh information and able to write code in the organization's preferred style.

Engineering organizations that grow their budget for LLMs by demonstrating ROI will retain their top developers. When new, talented developers are choosing between organizations, a big factor will be the quality of the LLMs available. Developers will join and remain where they are empowered by continuously improving LLMs—models that understand the latest about their codebase, projects, styles, best practices, and tech stack.

The first organizations to recognize the need for better data are those that avoid LLM APIs because of privacy and security concerns. They are deploying open-source LLMs on their own infrastructure, and these models start out significantly less helpful than GPT-4, so they need substantial improvement. But even organizations using OpenAI face two problems: they must advocate for LLM budgets, and they must deal with stale data (e.g. GPT-4 not knowing about v3 of a library because it was released in Nov 2021, after the model's training data cutoff).

Your organization needs to collect data on how you build software

To understand and expand the use of LLMs on your team, you need to collect data on how your organization builds software. You already collect a lot of data: source code, Git versions, issues / tickets, PRs / MRs, discussions, logs, etc. Such data was instrumental in training the first coding-capable LLMs. However, this data is more about what was built and less about how it was built.

Making LLMs more helpful for your developers requires you to collect better data on what happens between Git commits. Existing data is generally missing three key aspects:

- The step-by-step process a developer takes to complete a task

- The context the developer uses to decide what to do at each step

- Natural language that explains the reasoning behind the steps

The good news is that such data is already being created as a by-product of LLM-aided development. Say you have a developer, Hiro, on your team. To get suggestions, Hiro needs to gather context (e.g. code and docs) and give it to the LLM. As Hiro and the LLM interact, they iteratively accomplish the task at hand, using a mix of code and natural language. This data, when combined with the code that Hiro ultimately commits, offers a rich description of how the software was built.
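To make the three missing aspects concrete, here is a minimal sketch of how one interaction like Hiro's might be recorded: an ordered list of steps (the process), the context supplied at each step, and the natural-language prompt explaining it, plus the code finally committed. The `DevelopmentRecord` and `Step` classes and their fields are hypothetical, chosen for illustration; they are not Continue's actual data format.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical schema for illustration only; not Continue's actual data format.

@dataclass
class Step:
    """One turn in a developer/LLM interaction."""
    context: List[str]  # what the developer gave the LLM (code snippets, docs)
    prompt: str         # natural-language request or reasoning
    suggestion: str     # code or text returned by the LLM

@dataclass
class DevelopmentRecord:
    """How a task was built: the ordered steps plus the code finally committed."""
    task: str
    steps: List[Step] = field(default_factory=list)
    committed_code: str = ""

    def add_step(self, context: List[str], prompt: str, suggestion: str) -> None:
        # Steps are appended in order, preserving the step-by-step process.
        self.steps.append(Step(context, prompt, suggestion))

# Usage: recording a hypothetical session for Hiro
record = DevelopmentRecord(task="Add retry logic to fetch_user")
record.add_step(
    context=["def fetch_user(user_id): ..."],
    prompt="Wrap this call in exponential-backoff retries",
    suggestion="for attempt in range(3): ...",
)
record.committed_code = "for attempt in range(3): ..."
print(len(record.steps))  # 1
```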

The bad news is that almost all developers are using LLMs through Copilot and ChatGPT, which means that basically no one besides GitHub and OpenAI is collecting this data. When Hiro presses Tab to accept a Copilot suggestion (or ignores it), or decides whether to copy code generated by ChatGPT, those choices signal to the products what they should do more or less of in the future. Even if Hiro edits the code later, the difference between the suggested generation and the final state can be used as a signal too.
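The implicit signals described above could be turned into a single numeric score roughly as follows. This is a sketch under stated assumptions: `feedback_signal` is a hypothetical helper, and using Python's `difflib.SequenceMatcher.ratio()` to measure how much of an accepted suggestion survived into the final code is one simple choice, not how Copilot or ChatGPT actually compute their signals.

```python
import difflib
from typing import Optional

def feedback_signal(suggestion: str, accepted: bool, final_code: Optional[str] = None) -> float:
    """Score an LLM suggestion from implicit developer feedback.

    Hypothetical heuristic: a rejected suggestion scores 0.0; an accepted one
    scores by how similar it is to the code the developer ended up with
    (1.0 means it was kept unchanged).
    """
    if not accepted:
        return 0.0
    if final_code is None:
        # Accepted and no later edits observed: treat it as fully kept.
        return 1.0
    # ratio() returns a similarity in [0, 1] based on matching subsequences.
    return difflib.SequenceMatcher(None, suggestion, final_code).ratio()

print(feedback_signal("x = 1", accepted=False))  # 0.0
print(feedback_signal("x = 1", accepted=True))   # 1.0
print(feedback_signal("return a + b", accepted=True, final_code="return a - b"))
```

A later edit that changes only a small part of the suggestion still yields a high score, while heavy rewrites pull it toward 0.0, which matches the idea that the suggested-versus-final difference carries signal.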

There are still many open questions about how best to capture, structure, and apply this development data to create more helpful LLMs, but it's clear that collecting implicit feedback from developers like Hiro to power a development data engine is key. Once captured, the data each developer generates in the course of their work can be used to improve LLMs for the entire team.

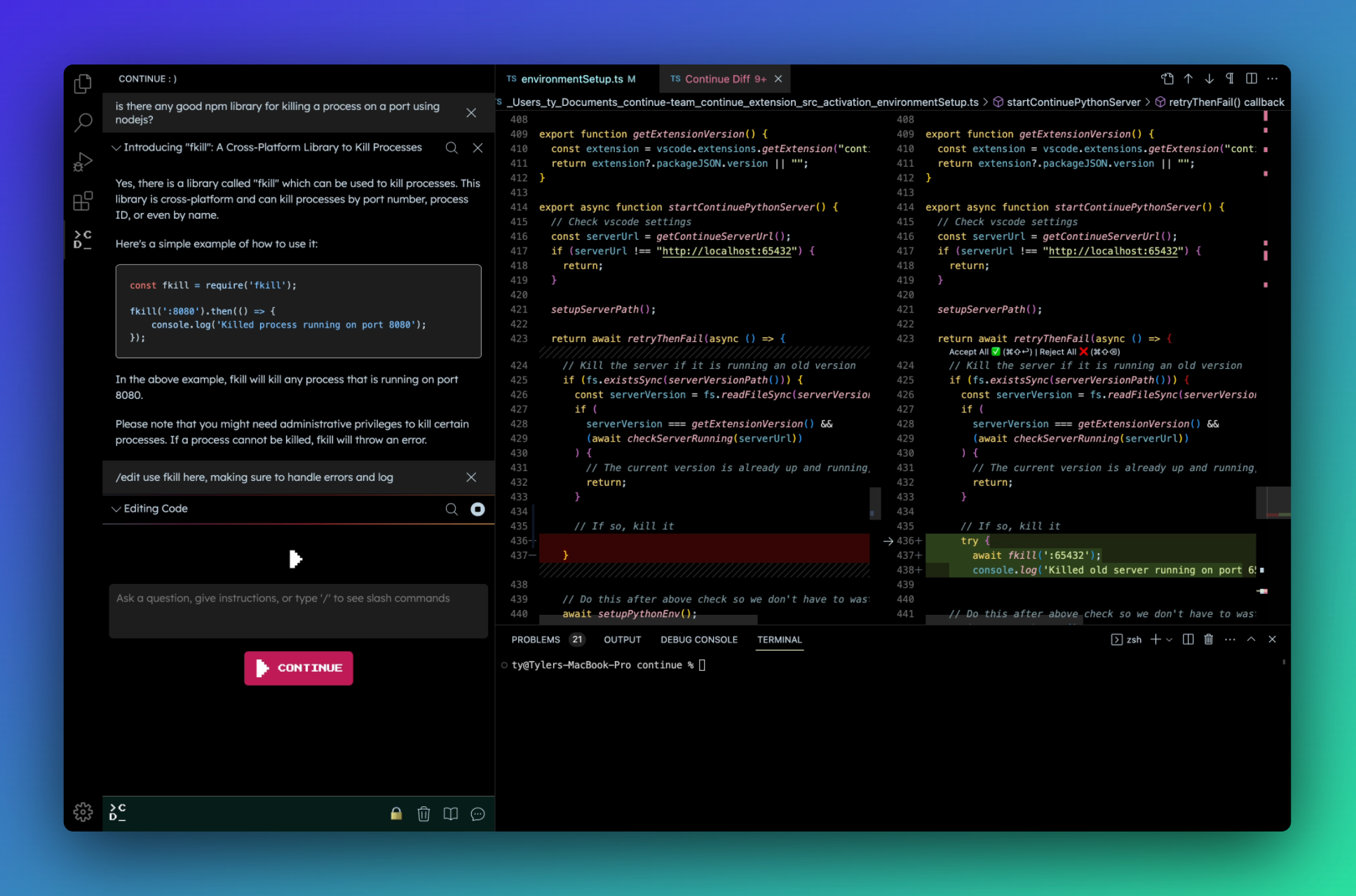

Continue helps you automatically collect this data

At Continue, we are on a mission to accelerate the creation of great software. But great means something different to each engineering team.

This is why we are building an open-source coding autopilot that allows you to automatically collect your development data. From this data, you can calculate the ROI of LLMs. You can get a better sense of what is frustrating your developers. And you can improve models to help them overcome these challenges.
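As a rough illustration of turning collected data into an ROI-related metric, the sketch below computes a per-developer suggestion acceptance rate from an event log. The JSON-lines event format, the event names, and `acceptance_rates` are all hypothetical assumptions, and an acceptance rate is only one possible input to an ROI calculation, not the whole picture.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable

# Hypothetical event format, one JSON object per line, e.g.
#   {"developer": "hiro", "event": "suggestion_shown"}
#   {"developer": "hiro", "event": "suggestion_accepted"}

def acceptance_rates(lines: Iterable[str]) -> Dict[str, float]:
    """Extract events from JSON lines and transform them into per-developer acceptance rates."""
    shown: Dict[str, int] = defaultdict(int)
    accepted: Dict[str, int] = defaultdict(int)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        event = json.loads(line)
        dev = event["developer"]
        if event["event"] == "suggestion_shown":
            shown[dev] += 1
        elif event["event"] == "suggestion_accepted":
            accepted[dev] += 1
    # `shown` only holds developers with at least one suggestion, so n >= 1.
    return {dev: accepted[dev] / n for dev, n in shown.items()}

log = """
{"developer": "hiro", "event": "suggestion_shown"}
{"developer": "hiro", "event": "suggestion_accepted"}
{"developer": "hiro", "event": "suggestion_shown"}
{"developer": "ana", "event": "suggestion_shown"}
""".splitlines()
print(acceptance_rates(log))  # {'hiro': 0.5, 'ana': 0.0}
```

In practice the extracted events would likely be loaded into a database or warehouse before a transformation like this runs; the in-memory version only shows the shape of the computation.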

We are working with forward-looking organizations to set up their development data engines. We begin by helping them extract, load, and transform their data, so they can gain visibility into how their developers use LLMs now and establish the foundation needed to improve LLMs later. If you are interested in working with us, please email us at data@continue.dev.

If you liked this blog post and want to read more in the future about DevAI (the community of folks building software with the help of LLMs), join our monthly newsletter.