Parallel Tool Calling: Making AI Agents Work Faster

By Patrick Erichsen, Software Engineer at Continue

If you've ever watched an AI agent slowly read file after file, one at a time, you know the pain. What should take seconds stretches into minutes as the model politely waits for each operation to complete before starting the next. It's like watching someone open browser tabs one by one instead of middle-clicking them all at once.

This is the problem I set out to solve with parallel tool calling in Continue. The result? Instead of sitting through five turns of conversation for five file reads, we do it all at once. For teams running agents at scale, this isn't just a nice-to-have—it's the difference between agents that feel responsive and ones that feel broken.

The Sequential Bottleneck

Let's say you ask an agent to analyze multiple files in your codebase. With traditional tool calling, here's what happens:

- Model: "I want to read file A"

- System: reads file A, sends content back

- Model: "Now I want to read file B"

- System: reads file B, sends content back

- Model: "Now I want to read file C"

- And so on...

Each step involves network latency and a full inference pass through the model. If you're reading five files, that's five round trips. In plan mode especially, where the model is essentially grepping around your codebase to understand the structure, this becomes painful fast.

How Parallel Tool Calling Works

The key insight is that many tool operations are independent. Reading multiple files doesn't require any particular order—the model can request all of them simultaneously.

With parallel tool calling, frontier models like GPT-4, Claude, and Gemini can now say:

"I want to read file A, file B, and file C all at the same time."

The model includes multiple tool calls in a single response. We parse all of them, execute them in parallel, and send all the results back in one go. What used to be five conversation turns becomes one.
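As a rough illustration of that single-turn flow, here is a minimal sketch that runs every tool call from one response concurrently. The `ToolCall` and `ToolResult` shapes, `runTool`, and `executeToolCalls` are hypothetical names made up for this example, not Continue's actual code.

```typescript
// Hypothetical shapes for illustration; not Continue's actual types.
interface ToolCall {
  id: string;
  name: string;
  args: Record<string, string>;
}

interface ToolResult {
  toolCallId: string;
  content: string;
}

// Stand-in for a real tool runner: pretends to read a file.
async function runTool(call: ToolCall): Promise<string> {
  if (call.name !== "read_file") {
    throw new Error(`Unknown tool: ${call.name}`);
  }
  return `contents of ${call.args.path}`;
}

// Start every tool call at once and return results in the same order
// as the calls. A failing call becomes an error result instead of
// rejecting the whole batch.
async function executeToolCalls(calls: ToolCall[]): Promise<ToolResult[]> {
  return Promise.all(
    calls.map(async (call): Promise<ToolResult> => {
      try {
        return { toolCallId: call.id, content: await runTool(call) };
      } catch (err) {
        const message = err instanceof Error ? err.message : String(err);
        return { toolCallId: call.id, content: `Error: ${message}` };
      }
    }),
  );
}
```

Catching errors per call is a design choice in this sketch: one unreadable file should not discard the results of the other reads.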

// Before: Sequential tool calls

// Turn 1: read_file(fileA) → response

// Turn 2: read_file(fileB) → response

// Turn 3: read_file(fileC) → response

// After: Parallel tool calls

// Turn 1: [read_file(fileA), read_file(fileB), read_file(fileC)] → all responses

Implementation Deep Dive

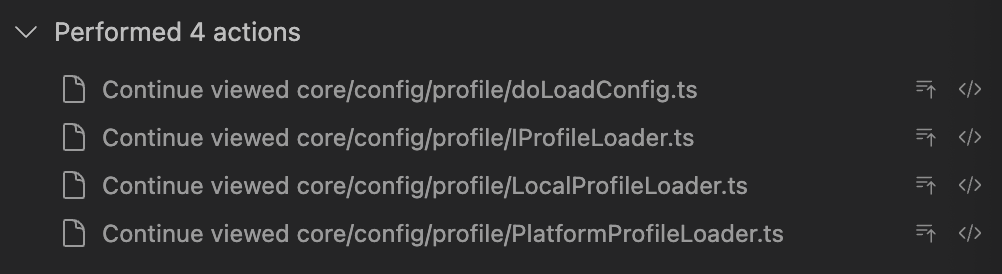

The technical challenge was that Continue's architecture was built around the assumption of one tool call per turn. I had to refactor the entire tool calling pipeline:

Message Structure Changes: Instead of storing a single toolCallState, chat history items now store toolCallStates[], an array that can handle multiple concurrent tool calls while maintaining backward compatibility.
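To make the shape change concrete, here is a sketch of one way a history item could carry both fields. Only the names `toolCallState` and `toolCallStates` come from the change described above. The other fields and the `getToolCallStates` helper are assumptions for illustration, and the real backward-compatibility handling may differ.

```typescript
// Hypothetical types; only the two tool-call field names are from the article.
type ToolStatus = "generating" | "generated" | "done";

interface ToolCallState {
  toolCallId: string;
  toolName: string;
  status: ToolStatus;
}

interface ChatHistoryItem {
  message: string;
  // Legacy single-call field, kept here so older saved items still load.
  toolCallState?: ToolCallState;
  // New field: one entry per tool call in the turn.
  toolCallStates?: ToolCallState[];
}

// Read tool calls uniformly, whichever field the item was saved with.
function getToolCallStates(item: ChatHistoryItem): ToolCallState[] {
  if (item.toolCallStates && item.toolCallStates.length > 0) {
    return item.toolCallStates;
  }
  return item.toolCallState ? [item.toolCallState] : [];
}
```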

Streaming Updates: The streaming logic needed to handle multiple tool call deltas coming from the model simultaneously. Each tool call gets its own state machine, transitioning from "generating" to "generated" to "done" independently.
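A minimal sketch of per-call state tracking follows. The delta shape (an `index` plus optional fragments) is an assumption loosely modeled on how streaming APIs tend to chunk tool calls, and `applyDelta` and `advance` are hypothetical helpers, not Continue's reducers.

```typescript
type ToolStatus = "generating" | "generated" | "done";

// Hypothetical delta: a fragment of one tool call, keyed by its
// position in the model's response.
interface ToolCallDelta {
  index: number;
  id?: string;
  name?: string;
  argsFragment?: string;
}

interface StreamingToolCall {
  id: string;
  name: string;
  argsJson: string;
  status: ToolStatus;
}

// Fold one delta into the matching call, creating it on first sight.
// Returns a new Map so the previous state is left untouched.
function applyDelta(
  states: Map<number, StreamingToolCall>,
  delta: ToolCallDelta,
): Map<number, StreamingToolCall> {
  const next = new Map(states);
  const current: StreamingToolCall = next.get(delta.index) ?? {
    id: "",
    name: "",
    argsJson: "",
    status: "generating",
  };
  next.set(delta.index, {
    ...current,
    id: delta.id ?? current.id,
    name: delta.name ?? current.name,
    argsJson: current.argsJson + (delta.argsFragment ?? ""),
  });
  return next;
}

// Allowed transitions; each call advances on its own.
const NEXT_STATUS: Record<ToolStatus, ToolStatus | null> = {
  generating: "generated",
  generated: "done",
  done: null,
};

function advance(call: StreamingToolCall): StreamingToolCall {
  const status = NEXT_STATUS[call.status];
  return status ? { ...call, status } : call;
}
```

Keying by `index` lets fragments for different calls arrive interleaved without their argument strings being mixed together.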

Permission Management: Users need to approve or reject each tool call individually. The UI now shows separate approval buttons for each pending tool call, with clear messaging like "Continue wants to use read_file" for each operation.

API Compatibility: Different providers format parallel tool calls differently. For Claude's API, we had to prevent message flattening that would lose individual toolCallId properties needed for the tool_result blocks.
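To show why the individual IDs matter, here is a sketch of building the reply for Claude. In Anthropic's Messages API, each `tool_result` block names the tool call it answers through `tool_use_id`, so every result must keep its own ID. The `ToolResult` input shape and the `toAnthropicToolResults` helper are hypothetical, not Continue's converter.

```typescript
// Hypothetical internal result shape.
interface ToolResult {
  toolCallId: string;
  content: string;
}

// Block shape used for tool results in Anthropic's Messages API.
interface ToolResultBlock {
  type: "tool_result";
  tool_use_id: string;
  content: string;
}

interface UserMessage {
  role: "user";
  content: ToolResultBlock[];
}

// Put all results from one parallel turn into a single user message,
// one block per tool call, each keeping its own ID.
function toAnthropicToolResults(results: ToolResult[]): UserMessage {
  return {
    role: "user",
    content: results.map((result) => ({
      type: "tool_result" as const,
      tool_use_id: result.toolCallId,
      content: result.content,
    })),
  };
}
```

If the results were flattened into one block of text, there would be no way to tell which output belongs to which request.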

Real-World Impact

I've seen this feature shine most in plan mode, where the model is exploring a codebase. Instead of the agent taking 30+ seconds to read through project files sequentially, it happens almost instantly.

The performance gains scale with the number of independent operations. For models that are smart enough to identify parallelizable work (which most frontier models are), you get roughly an n-fold speedup for n parallel operations, because n model round trips collapse into one.
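A back-of-the-envelope model shows where that scaling comes from. The numbers below are invented for illustration, not measurements, and the model ignores the final inference pass that consumes the results.

```typescript
// Hypothetical numbers and names, for illustration only.
interface TurnCosts {
  inferenceMs: number; // one model pass plus network round trip
  toolMs: number[]; // execution time of each independent tool call
}

// Sequential: every tool call pays for its own model round trip.
function sequentialMs(costs: TurnCosts): number {
  return costs.toolMs.reduce((total, t) => total + costs.inferenceMs + t, 0);
}

// Parallel: one round trip, then wait for the slowest tool.
function parallelMs(costs: TurnCosts): number {
  return costs.inferenceMs + Math.max(0, ...costs.toolMs);
}

const fiveReads: TurnCosts = {
  inferenceMs: 2000,
  toolMs: [100, 100, 100, 100, 100],
};
console.log(sequentialMs(fiveReads)); // 10500
console.log(parallelMs(fiveReads)); // 2100
```

Under these assumptions, five reads come out five times faster. The ratio shrinks if one tool is much slower than the rest, since the parallel turn waits for the slowest call.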

Beyond the Big Players

What excites me most about this feature isn't just that it works with OpenAI, Anthropic, and Google models. It's that we're democratizing this capability.

If you're running IBM Granite behind your firewall, or some other model that doesn't have parallel tool calling built-in, Continue gives you this functionality out of the box. The model just needs to be smart enough to understand the concept—if it can follow instructions to include multiple tool calls in a response, our system handles the rest.

This is bigger than just Continue. We're an open-source project, so anyone can look at our implementation and adapt it. An enterprise that's building internal AI tooling doesn't need to implement parallel tool calling from scratch—they can learn from what we've built.

The Gradient Effect

One thing I've learned is that parallel tool calling isn't binary. It's not like "the model supports it or it doesn't." It's more of a gradient based on the model's instruction-following capabilities.

Highly capable models like GPT-4o or Claude Sonnet naturally understand when operations can be parallelized. They'll look at a request like "analyze these five files" and intelligently batch the read operations.

Less capable models might not grasp the nuances and default to sequential operations. But even then, if they do occasionally emit parallel tool calls, our system is ready to handle them.

Why It Matters for Continuous AI

Integrating AI into the development workflow is a key component of Continuous AI. It requires speed, context-awareness, and adaptability. Parallel tool calling supports this by:

- Reducing latency → If the AI can fetch context from multiple sources in parallel (codebase, tests, docs, etc.), it can deliver useful suggestions without slowing the developer down.

- Maintaining flow → Continuous AI only works if devs don’t feel interrupted. Parallelization keeps responses aligned with real-time editing and review.

- Handling complexity → Editing workflows often involve multiple locations, systems, and dependencies. Parallel calls let the AI gather all relevant signals in one pass rather than forcing sequential bottlenecks.

- Scaling context → In Continuous AI, you might want to check test status, linting errors, commit history, and security policies all at once. Parallel tool calls make that orchestration practical.

Looking Forward

This feature represents a broader trend in AI tooling: making agents actually usable in production. The difference between a demo that works in ideal conditions and a tool that teams rely on daily often comes down to performance details like this.

We're not done yet. There are still improvements to make around tool queuing, dependency detection between operations, and better UI feedback during parallel execution. But parallel tool calling gets us significantly closer to agents that feel as responsive as the tools developers already love.

If you want to try it out, parallel tool calling is available now in Continue. Just use a frontier model in agent mode and ask it to do multiple independent operations—you'll see the difference immediately.

The code is all open source at github.com/continuedev/continue, and you can find the specific implementation in PR #6524. We'd love to hear how it works for your team.

Continue is an open-source AI code assistant that brings the power of ChatGPT to your IDE. Learn more at continue.dev.