Simplifying AI Development with Model Context Protocol, Docker, and Continue Hub

A joint post from Continue and Docker exploring how to leverage containerized MCP blocks to enhance your AI native development experience

Introduction

As developers integrate AI into their workflows, they face several practical challenges: limited model context windows, insufficient tooling access, and difficulty connecting models to external data sources. The Model Context Protocol (MCP), an open protocol developed by Anthropic, addresses these limitations by providing a standardized way to connect large language models (LLMs) with external data sources and tools.

Continue and Docker have partnered to make MCP blocks easily accessible through Continue Hub, allowing developers to securely utilize these powerful components while maintaining the isolation, reproducibility, and supply chain security that containers provide.

What is the Model Context Protocol?

MCP solves a fundamental problem in AI development: how to give LLMs access to specific tools and data without rebuilding entire applications. It's a client-server protocol where:

- The client (like Continue) sends requests for specific information or actions

- MCP servers respond with the requested data or perform the specified actions

- The model can then use this information to provide more accurate, contextual responses

As Docker explains:

"MCP (Model Context Protocol), a new protocol open-sourced by Anthropic, provides standardized interfaces for LLM applications to integrate with external data sources and tools. With MCP, your AI-powered applications can retrieve data from external sources, perform operations with third-party services, or even interact with local filesystems."

In practical terms, this means your AI coding assistant can interface directly with your PostgreSQL database, execute git commands, or retrieve documentation, without you having to copy and paste that information into prompts.
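To make the request/response flow above more concrete, here is a minimal sketch of the messages involved. MCP messages are JSON-RPC 2.0 objects, and `tools/list` and `tools/call` are methods defined by the protocol. The tool name `query`, its `sql` argument, and the helper functions are assumptions made for illustration, not part of Continue's or Docker's code.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each request gets a fresh id so replies can be matched to it


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_result(request: dict, result: dict) -> dict:
    """Build the server's reply; the shared id ties it to the request."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# The client first asks which tools the server offers, then calls one.
list_tools = make_request("tools/list")
call_tool = make_request(
    "tools/call",
    {"name": "query", "arguments": {"sql": "SELECT 1"}},  # hypothetical tool
)
# A reply carrying text content that the model can read as context.
reply = make_result(call_tool, {"content": [{"type": "text", "text": "1"}]})

for message in (list_tools, call_tool, reply):
    print(json.dumps(message))
```

The client never needs to know how the server produces its answer, which is what lets the same assistant talk to a database server, a git server, or a documentation server in the same way.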

Why Use Docker for MCP Blocks?

Running MCP servers directly can present several challenges:

- Environment conflicts: Installing MCP servers often requires specific versions of Node.js, Python, and other dependencies that may conflict with existing installations

- Lack of isolation: Direct MCP server execution grants broad access to host files and resources

- Complex setup: Traditional setups require downloading, configuring, and managing code and environments

- Cross-platform issues: Ensuring consistency across different architectures and operating systems

- Dependency management: Safely encapsulating and distributing server-specific runtime dependencies

Docker addresses these challenges by containerizing MCP servers, providing:

- Isolation: Run MCP servers in contained environments without affecting your host system

- Reproducibility: Ensure consistent behavior across development, testing, and production

- Supply chain security: Scan and mirror verified container images through your organization's registry (JFrog Artifactory, AWS ECR, Azure Container Registry, Google Container Registry)

- Clean developer environments: Avoid polluting your system with random `uv` or `npx` installs

- Cross-platform compatibility: Run the same containers regardless of operating system or architecture

As David Soria Parra from Anthropic noted:

"Building an MCP server for ffmpeg would be a tremendously difficult undertaking without Docker. Docker is one of the most widely used packaging solutions for developers. The same way it solved the packaging problem for the cloud, it now has the potential to solve the packaging problem for rich AI agents."

Getting Started with Dockerized MCP Blocks in Continue Hub

Continue Hub now supports Docker-based MCP blocks, allowing you to leverage the full power of containerized MCP servers in your AI native development workflow. Let's explore two powerful examples: mcp/postgres and mcp/memory.

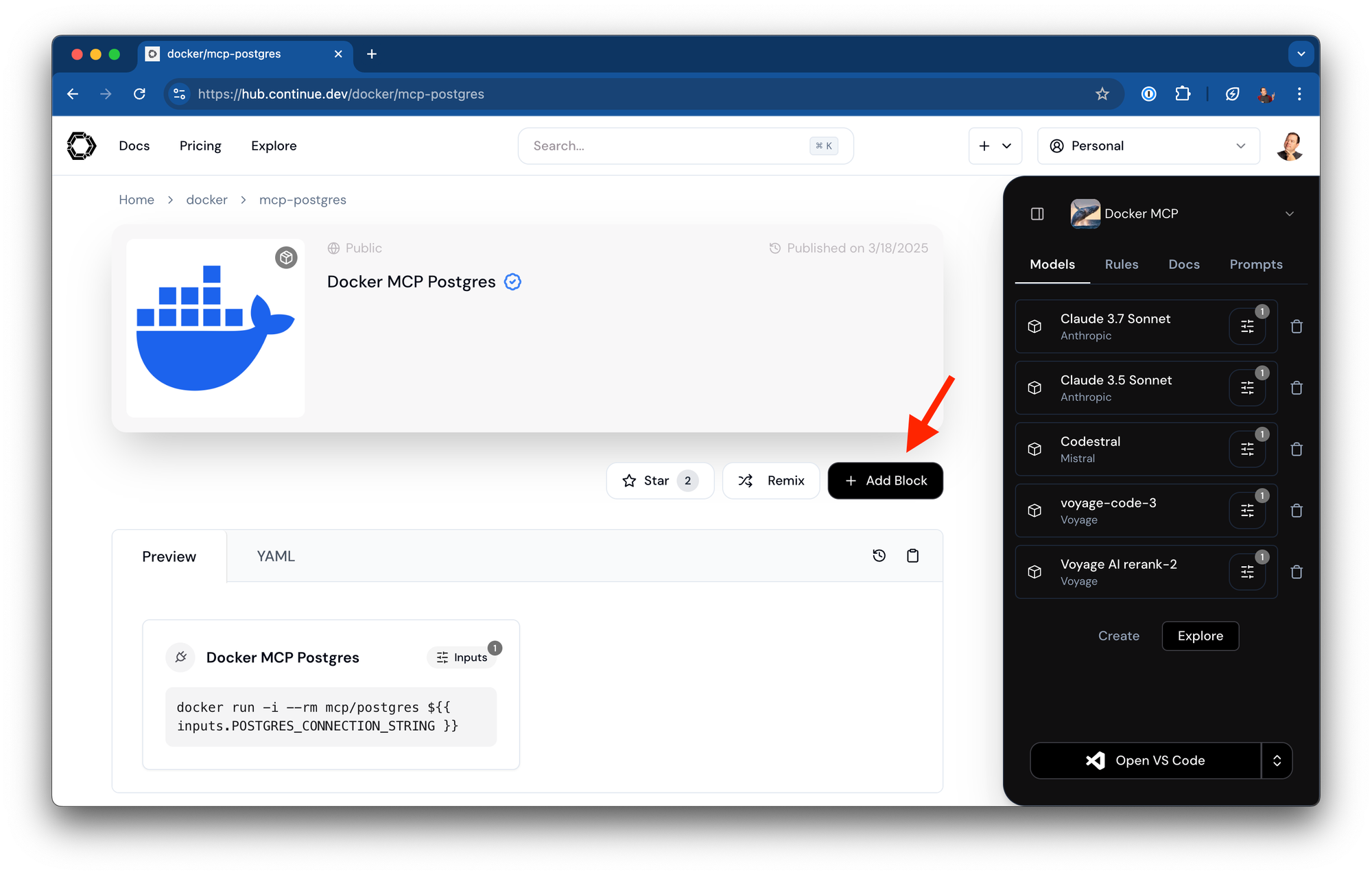

Example 1: Using mcp/postgres with Continue

The mcp/postgres MCP block allows your AI assistant to interact directly with PostgreSQL databases, enabling database-aware conversations, queries, and even schema modifications. We worked with Docker to publish mcp/postgres on Continue's Hub.

You can add this block to any assistant.

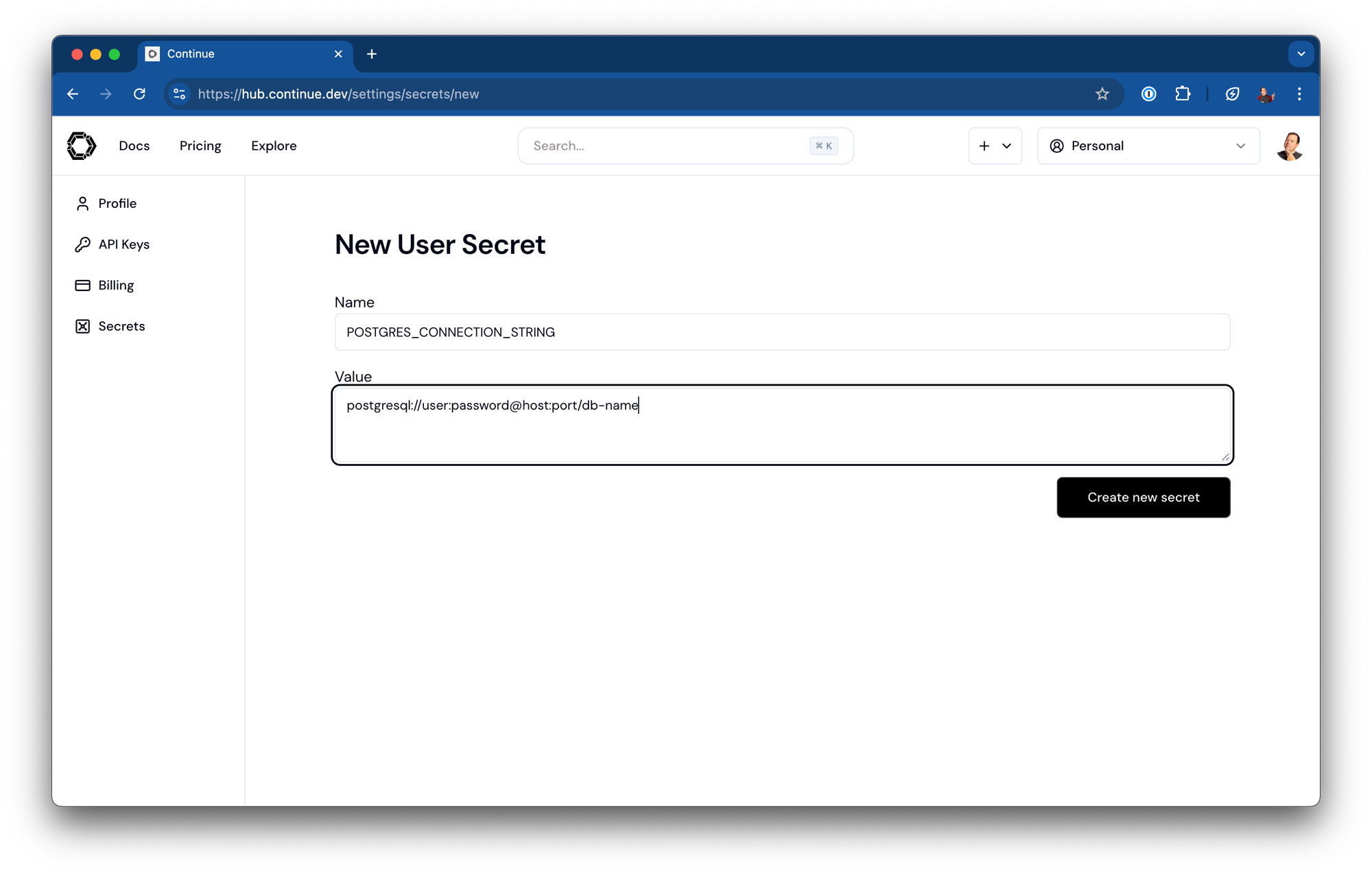

Then add a secret for the connection string, in the form:

postgresql://username:password@hostname:port/database

Make sure to save the assistant.
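A malformed connection string tends to surface only once the server tries to connect, so it can help to sanity-check the value before storing it as a secret. The sketch below uses only Python's standard library; the function name `check_postgres_url` and the example host and database names are hypothetical.

```python
from urllib.parse import urlsplit


def check_postgres_url(url: str) -> dict:
    """Validate the shape of a PostgreSQL URL and return its non-secret parts."""
    parts = urlsplit(url)
    if parts.scheme not in ("postgresql", "postgres"):
        raise ValueError("expected a postgresql:// URL")
    if not parts.hostname:
        raise ValueError("connection string has no hostname")
    database = parts.path.lstrip("/")
    if not database:
        raise ValueError("connection string has no database name")
    # Accessing .port raises ValueError if the port is not a number.
    # The password is deliberately left out so the result is safe to log.
    return {
        "user": parts.username,
        "host": parts.hostname,
        "port": parts.port or 5432,  # 5432 is PostgreSQL's default port
        "database": database,
    }


print(check_postgres_url("postgresql://app:s3cret@db.internal:5432/shop"))
```

Passing the placeholder string from above unchanged raises a `ValueError`, because `port` is not a number. That is a handy reminder to substitute real values.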

Configuration in Continue:

    name: Docker MCP Postgres
    version: 1.0.0
    schema: v1
    mcpServers:
      - name: Docker MCP Postgres
        command: docker
        args:
          - run
          - -i
          - --rm
          - mcp/postgres
          - postgresql://username:password@hostname:port/database
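To see what this configuration amounts to, the sketch below turns the `command` and `args` fields into the command line they describe. It assumes the client simply runs `command` followed by `args` and talks to the server over standard input and output. The helper `launch_argv` is hypothetical and is not Continue's actual implementation.

```python
import shlex
from typing import List


def launch_argv(server: dict) -> List[str]:
    """Combine an mcpServers entry into the argv a client would execute."""
    return [server["command"], *server.get("args", [])]


postgres_server = {
    "name": "Docker MCP Postgres",
    "command": "docker",
    "args": [
        "run",
        "-i",    # keep stdin open so the client can send requests
        "--rm",  # remove the container when the session ends
        "mcp/postgres",
        "postgresql://username:password@hostname:port/database",
    ],
}

argv = launch_argv(postgres_server)
print(shlex.join(argv))  # shell-quoted form, convenient for manual testing
```

Running the printed command in a terminal, with a real connection string substituted, is a quick way to confirm that the image pulls and the server starts before wiring it into an assistant.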

What You Can Do:

With the Postgres MCP block configured, you can ask Continue questions like:

- "Show me all tables in our users database"

- "Create a query to find all customers who placed orders in the last 30 days"

- "Help me optimize this slow query that's causing performance issues"

- "Generate the SQL to create a new table for storing user activity logs"

The AI will use the Postgres MCP server to connect to your database, inspect schemas, run exploratory queries, and provide contextually relevant code and explanations based on your actual data structure.

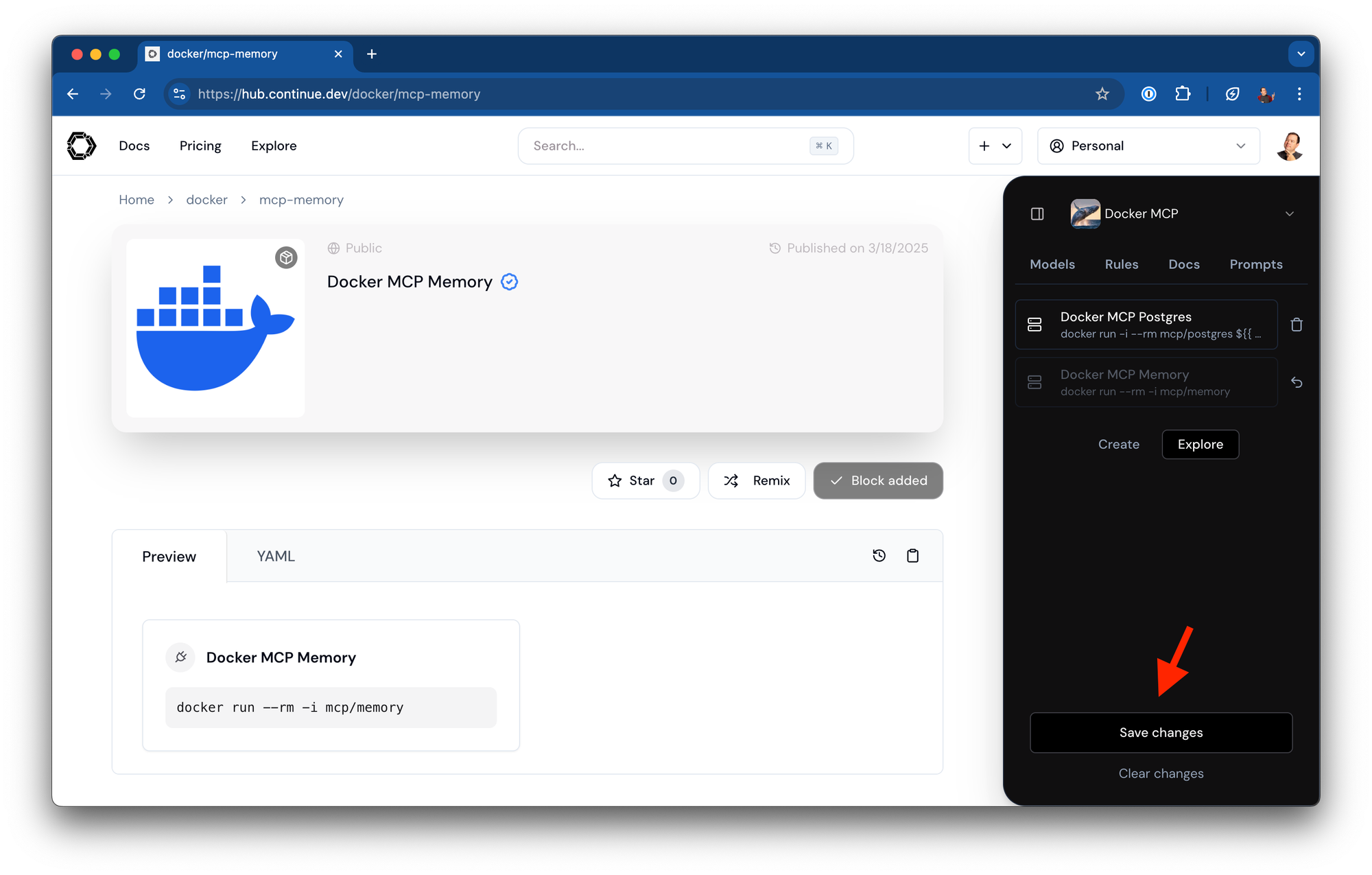

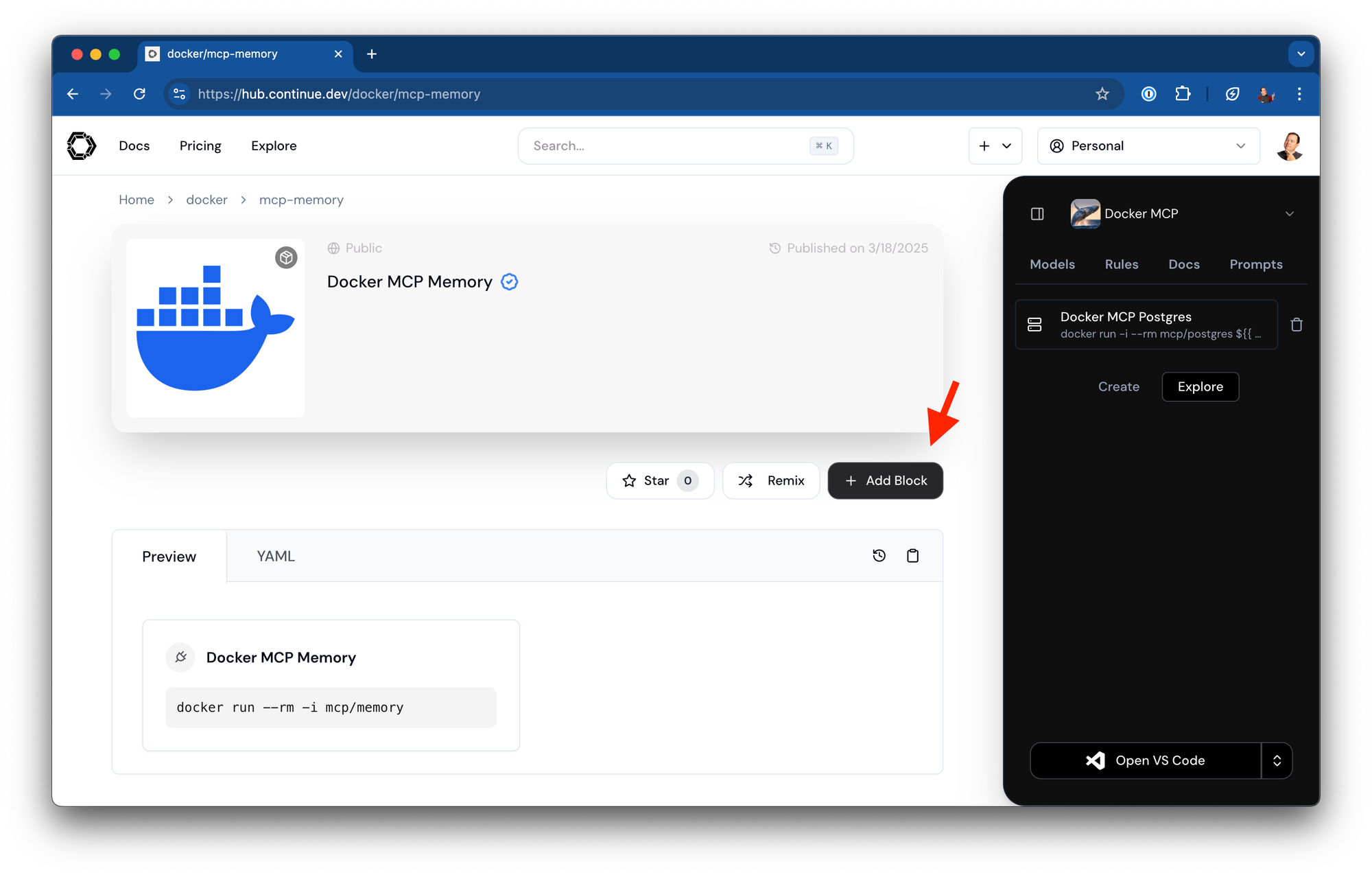

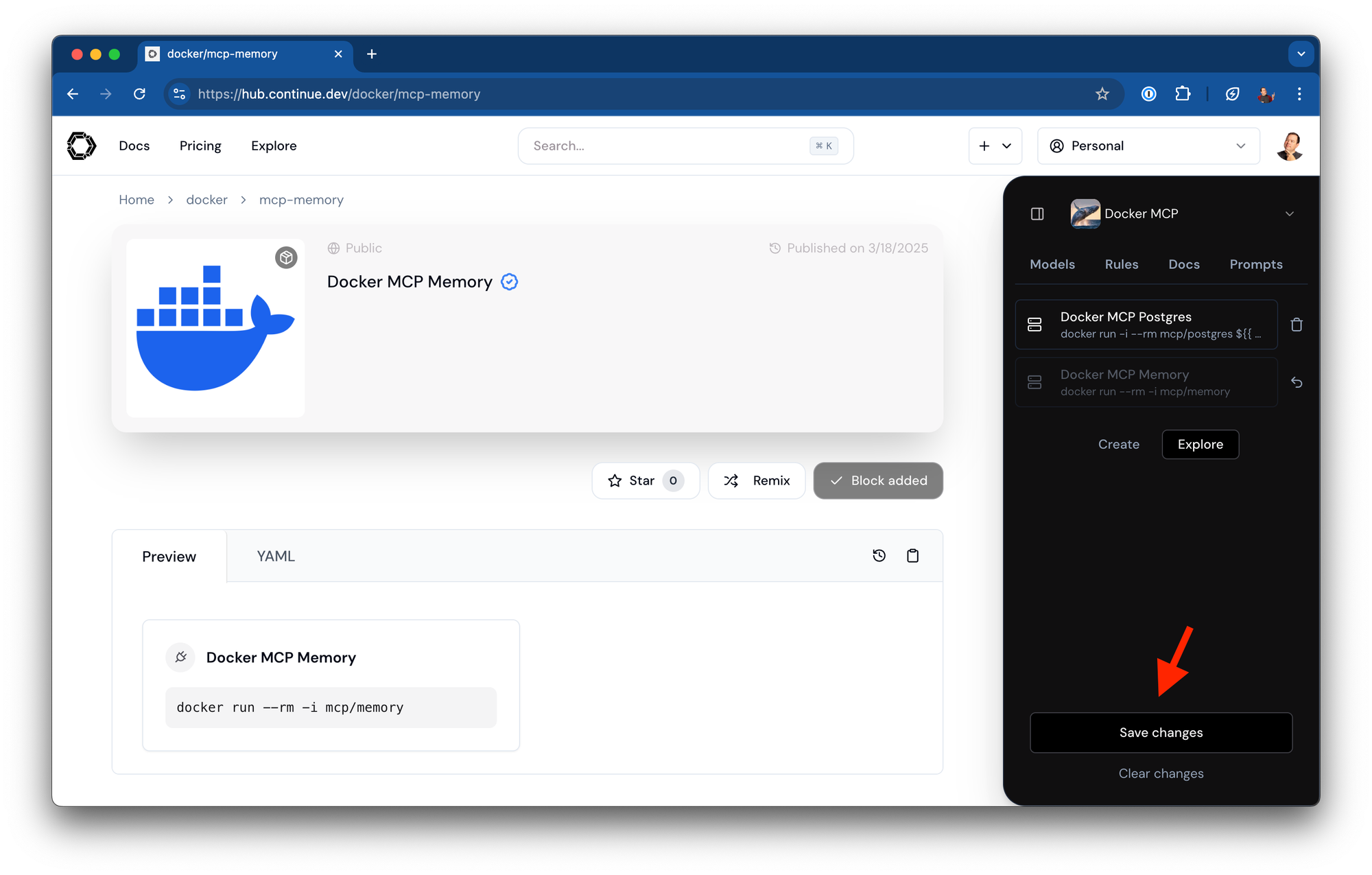

Example 2: Using mcp/memory with Continue

The mcp/memory MCP block provides persistent memory capabilities to your AI assistant, allowing it to store and retrieve important information across sessions. We also worked with Docker to publish mcp/memory on Continue's Hub.

Adding it to any assistant is as easy as clicking a button.

Make sure to save the assistant.

Configuration in Continue:

    name: Docker MCP Memory
    version: 1.0.0
    schema: v1
    mcpServers:
      - name: Docker MCP Memory
        command: docker
        args:
          - run
          - --rm
          - -i
          - mcp/memory

What You Can Do:

With the Memory MCP block configured, you can give Continue instructions like:

- "Remember that our API authentication scheme uses JWT tokens with a 24-hour expiration"

- "What did I tell you about our database schema last week?"

- "Save this code snippet as our standard error handling template"

- "Recall our team's code review standards"

The memory block creates a persistent knowledge store that survives across sessions, making your AI assistant incrementally more valuable as it retains important context about your projects, preferences, and development patterns.

Benefits for Enterprise Development Teams

For enterprise development teams, containerized MCP blocks offer significant advantages:

- Enhanced security: Containers provide isolation and can be scanned for vulnerabilities

- Consistent environments: All developers use identical MCP server configurations

- Governance and compliance: Centralized container registries enable tracking and auditing

- Version control: Pin to specific MCP server versions to ensure reliability

- Supply chain integrity: Sign and verify container images to prevent tampering

Enterprise teams can mirror verified Docker images into internal registries, applying security scanning and ensuring compliance with organizational policies.
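As a rough illustration of pinning and mirroring, the sketch below rewrites an image reference so it points at an internal registry and a fixed tag or digest, then swaps it into a block's `args`. The registry host `registry.example.com`, the tag, the digest, and both helper functions are placeholders invented for this example; use the values your own registry reports.

```python
import re
from typing import List, Optional

_DIGEST = re.compile(r"sha256:[0-9a-f]{64}")


def pinned_ref(
    image: str,
    registry: Optional[str] = None,
    tag: Optional[str] = None,
    digest: Optional[str] = None,
) -> str:
    """Return an image reference, optionally mirrored and pinned."""
    ref = f"{registry.rstrip('/')}/{image}" if registry else image
    if digest:
        # A digest identifies exact image content, unlike a movable tag.
        if not _DIGEST.fullmatch(digest):
            raise ValueError("expected a digest like sha256:<64 hex chars>")
        return f"{ref}@{digest}"
    if tag:
        return f"{ref}:{tag}"
    return ref


def with_image(args: List[str], old: str, new: str) -> List[str]:
    """Swap the image name in a block's args, leaving the flags untouched."""
    return [new if arg == old else arg for arg in args]


args = ["run", "-i", "--rm", "mcp/memory"]
mirrored = pinned_ref("mcp/memory", registry="registry.example.com", tag="1.0.0")
print(with_image(args, "mcp/memory", mirrored))
```

Pinning by digest is the stricter option because a tag can later be moved to different content, while a digest cannot.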

Moving Toward an AI Native Development Workflow

Many development teams are already integrating AI tools into their workflows, but are hitting limitations with current implementations. The combination of Continue Hub, MCP, and Docker containers helps overcome these barriers by:

- Allowing modular extension of AI capabilities with specific tools

- Standardizing how these extensions are packaged and distributed

- Maintaining security and isolation through container boundaries

- Enabling version control and governance of AI extensions

This aligns with the principles outlined in the amplified.dev manifesto:

"We believe in a future where developers are amplified, not automated... To create these systems, we each have our own needs, so we will need to create and tune our own reusable components."

By containerizing MCP blocks, developers can build exactly the AI-enhanced workflow they need for their specific projects and environments, rather than being limited to what a single vendor provides.

Getting Started

Ready to try containerized MCP blocks with Continue?

- Ensure Docker Desktop is installed on your system

- Set up Continue via VS Code or JetBrains

- Configure your desired MCP blocks using the patterns shown above

- Start building with the enhanced capabilities of your AI-powered development environment
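Before configuring any blocks, it can save time to confirm that Docker is installed and that its daemon is reachable. The sketch below is one way to check, assuming `docker info` exits with a non-zero status when the daemon cannot be reached. The function name `docker_status` is hypothetical.

```python
import shutil
import subprocess


def docker_status() -> str:
    """Return 'missing', 'unreachable', or 'ready' for the local Docker setup."""
    exe = shutil.which("docker")
    if exe is None:
        return "missing"  # the docker CLI is not on PATH
    try:
        result = subprocess.run([exe, "info"], capture_output=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return "unreachable"
    # A zero exit code is taken to mean the CLI could talk to the daemon.
    return "ready" if result.returncode == 0 else "unreachable"


print(docker_status())
```

If this reports `unreachable`, starting Docker Desktop and running the check again is usually the first thing to try.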


Conclusion

Developers adopting AI tools are looking for secure, flexible solutions that fit into their existing workflows without creating new problems. By combining Continue Hub with Dockerized MCP blocks, teams can:

- Extend their AI assistants with specific capabilities they need

- Maintain security boundaries between components

- Avoid dependency conflicts and environment pollution

- Standardize on tools that work across their organization

- Build an incrementally more valuable AI-enhanced development environment

If you're looking to improve your AI native development experience while maintaining the security and reproducibility your team requires, containerized MCP blocks provide a practical path forward.