The Hidden Cost of Tool Switching

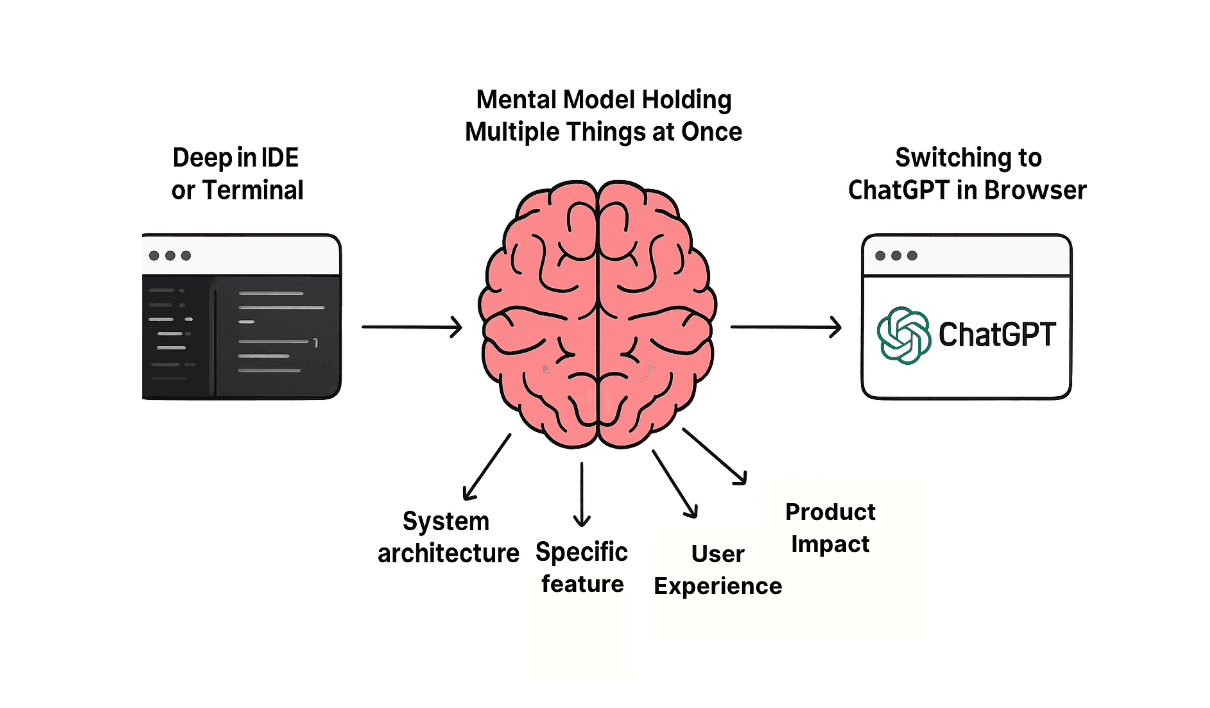

When you're deep in your IDE or terminal working on a complex problem, your brain is holding multiple things at once: the system architecture, the feature you're building, the user experience, and the impact of your decisions on the project. While you're focused, all of this stays carefully balanced in a single mental model. But when you're interrupted, even by something as seemingly innocuous as switching to ChatGPT in your browser, that focus breaks, and it can take an average of 23 minutes to get back to where you were.

Context switching can cost teams hundreds of dollars a day per developer. An interrupted task can take twice as long to complete as an uninterrupted one, and it is more likely to contain errors.

Think about your typical workflow when using ChatGPT or another LLM for coding help. You're in VS Code, you hit a problem, you switch to your browser, you describe the issue to ChatGPT, you wait for a response, you copy the code, you switch back to VS Code, you paste it in, you test it. That's at least four context switches for a single interaction. And if the code doesn't work? You're doing it all over again.

Why This Matters More Than You Think

Research shows that interrupted coding sessions correlate with a measurable drop in code quality. When you step away from a project, even for a short break, you start to lose the mental map of how everything fits together. That loss of context makes it easier to miss details, accidentally re-create functionality that already exists, or introduce subtle bugs. The longer the break, the more likely your next commit is to be less readable, contain more code smells, or add technical debt.

The Flow State Problem

We adopt tools like ChatGPT to make us more productive, but if those tools require constant context switching, they might actually be making us less productive. A recent study of experienced open-source developers found that when they were allowed to use AI tools, they took 19% longer to complete tasks, even though they believed AI was speeding them up by 20%.

The Solution: Integrate, Don't Separate

The answer isn't to stop using AI coding assistants; it's to use them in ways that minimize context switching. IDE-integrated tools such as Continue's extensions make AI assistance feel like a natural part of the coding process, so developers avoid the productivity-draining switch between their editor and a browser.

With Continue CLI and Agents, developers can take this a step further. Instead of pausing work to explain context to an external tool, you can run structured, repeatable AI actions directly from the command line or even in the background. Whether it's kicking off a refactor, generating tests, or improving documentation, the model operates within your existing flow. Because you never leave your development environment, you avoid the cognitive "reset tax" that comes with shifting mental contexts.

The Bottom Line

Context switching between your IDE and ChatGPT (or any other tool) is real, measurable, and costly. The research consistently shows that every switch taxes your cognitive resources, extends your task completion time, and introduces more errors into your code.

The best tools meet you where you already are. If an AI assistant forces you to constantly leave your development environment, it's creating friction even as it tries to help. And that friction adds up to hours of lost productivity every single week.

So yes, it's context switching. Yes, it impacts your productivity. And yes, there are actual studies showing the costs. Now, what are you going to do about it?